Greetings from Berkeley, where we’ve recently welcomed a merry band of cryptographers for what promises to be an outstanding summer program on...

We’re delighted to share that Miller fellow and Simons Institute Quantum Pod postdoc Ewin Tang has been awarded the 2025 Maryam Mirzakhani New...

This month, we held a joint workshop with SLMath on AI for Mathematics and Theoretical Computer Science. It was unlike any other Simons Institute...

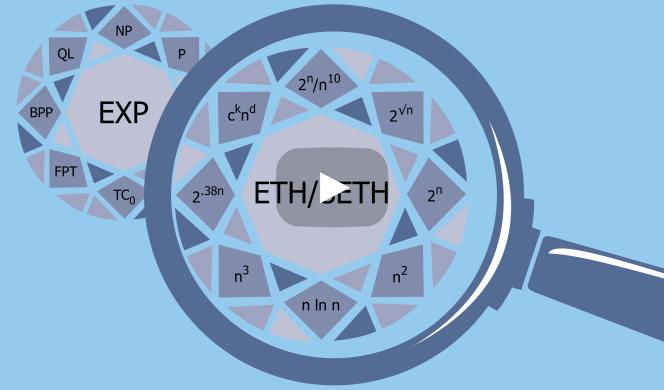

In this month’s newsletter, we’re highlighting a 2015 talk by Chris Umans on some of the then-state-of-the-art approaches to bounding the matrix multiplication exponent, an evergreen, fundamental topic that will be the focus of one of our upcoming program workshops in October 2025.

Greetings from Berkeley! We are gearing up for a busy wrap-up of the spring semester, with five back-to-back workshop weeks at the Simons Institute. And after a brief breather during which we will execute a planned upgrade of our auditorium’s A/V system, we will resume in mid-May for a bustling summer featuring a Cryptography program and a Quantum Computing summer cluster.

Irit Dinur's journey through mathematics and computer science led her to become the first woman professor at the Institute for Advanced Study School of Mathematics.

In this early February talk, Sasha Rush (Cornell) delves into the transformative impact of DeepSeek on the landscape of large language models (LLMs).

Expanders are sparse and yet highly connected graphs. They appear in many areas of theory: pseudorandomness, error-correcting codes, graph algorithms, Markov chain mixing, and more. I want to tell you about two results, proven in the last few months, that constitute a phase transition in our understanding of expanders.
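One standard way to make "sparse yet highly connected" precise is edge expansion: for a graph on n vertices, h(G) is the minimum, over nonempty vertex sets S with |S| ≤ n/2, of the number of edges leaving S divided by |S|. An expander family keeps h(G) bounded below by a constant while the degree stays fixed. The sketch below is only an illustration under that definition, not anything from the results discussed here. The helper names (`cycle_graph`, `hypercube_graph`, `edge_expansion`) are hypothetical, and the brute-force search is exponential in n, so it only suits tiny graphs.

```python
from fractions import Fraction
from itertools import combinations


def cycle_graph(n):
    """Adjacency sets for the n-vertex cycle (2-regular)."""
    return {v: {(v - 1) % n, (v + 1) % n} for v in range(n)}


def hypercube_graph(d):
    """Adjacency sets for the d-dimensional hypercube (d-regular, 2**d vertices)."""
    return {v: {v ^ (1 << b) for b in range(d)} for v in range(2 ** d)}


def edge_expansion(adj):
    """Brute-force h(G) = min |E(S, V \\ S)| / |S| over 0 < |S| <= n/2.

    Enumerates every candidate set S, so it is exponential in n and
    intended only for tiny illustrative graphs.
    """
    vertices = list(adj)
    n = len(vertices)
    best = None
    for k in range(1, n // 2 + 1):
        for subset in combinations(vertices, k):
            s = set(subset)
            # Count edges with exactly one endpoint in S.
            boundary = sum(1 for u in s for w in adj[u] if w not in s)
            ratio = Fraction(boundary, k)
            if best is None or ratio < best:
                best = ratio
    return best


if __name__ == "__main__":
    print(edge_expansion(cycle_graph(8)))      # 1/2
    print(edge_expansion(cycle_graph(16)))     # 1/4
    print(edge_expansion(hypercube_graph(3)))  # 1
```

The cycle shows why sparsity alone is not enough. Cutting it into two arcs severs only two edges, so its expansion decays like 4/n as it grows. The 8-vertex hypercube is used here only as a better-connected comparison at the same size. Its degree grows with dimension, so hypercubes are not a bounded-degree expander family.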

In this inaugural lecture of the Berkeley Neuro-AI Resilience Center, Daniel Jackson (MIT EECS), renowned author of *Portraits of Resilience*, shared his exploration of how resilience shapes human experience.

In this episode of our Polylogues web series, Science Communicator in Residence Anil Ananthaswamy talks with machine learning researcher Andrew Gordon Wilson (NYU). The two discuss Wilson’s work connecting transformers, Kolmogorov complexity, the no free lunch theorem, and universal learners.

Greetings from Berkeley, where we are nearly halfway through the second semester of our yearlong research program on large language models. In early February, the program hosted a highly interdisciplinary workshop with talks covering a host of topics, including one by program organizer Sasha Rush on the then-just-released DeepSeek, which we’re featuring in our SimonsTV corner this month. In broader theory news, the 2025 STOC conference announced its list of accepted papers in early February; judging by both the record number of accepted papers (more than 200) and the significant leaps made across the spectrum of theoretical computer science, I’m delighted and proud to say that the field is alive and kicking!

Ten years ago, researchers proved that adding full memory can theoretically aid computation. They’re just now beginning to understand the implications.

In this episode of our Polylogues web series, Spring 2024 Science Communicator in Residence Ben Brubaker sits down with cryptographer and machine learning researcher Boaz Barak (Harvard and OpenAI). The two discuss Boaz’s path to theoretical computer science, the relationship between theory and practice in cryptography and AI, and specific topics in AI, including generality, alignment, worst- vs. average-case performance, and watermarking.