Abstract

A Few Comments (& Questions!) from a Condensed Matter Physicist

Daniel Fisher, Stanford University

Non-Adaptive Evolvability

Jeff Clune, University of Wyoming

I summarize two separate papers that document cases where evolution failed to evolve evolvability, even though it would have benefitted from doing so. Instead, in both cases, evolvability arises because of an accidental constraint of physics.The first documents that evolution fails to optimize mutation rates for long-term adaptation on rugged fitness landscapes [1]. The second documents that evolution does not evolve modularity in networks (e.g. genetic or neural) unless there exists a cost for network connections, even though such modularity improves performance and evolvability [2]. Together, these papers raise the question of how many instances of evolvability in nature arise as a by-product of some other force. The papers also provide instructions to engineers that wish to harness evolution to produce artificial intelligence as to how to increase the evolvability of evolutionary algorithms.

[1] Clune J, Misevic D, Ofria C, Lenski RE, Elena SF, and Sanjuán R (2008)

Natural selection fails to optimize mutation rates for long-term adaptation on rugged fitness landscapes. PLoS Computational Biology 4(9): e1000187.

[2] Clune J, Mouret J-B, Lipson H (2013)

The evolutionary origins of modularity. Proceedings of the Royal Society B. 280: 20122863.

Complexity of Evolutionary Equilibria in Static Fitness Landscapes

Artem Kaznatcheev, McGill University

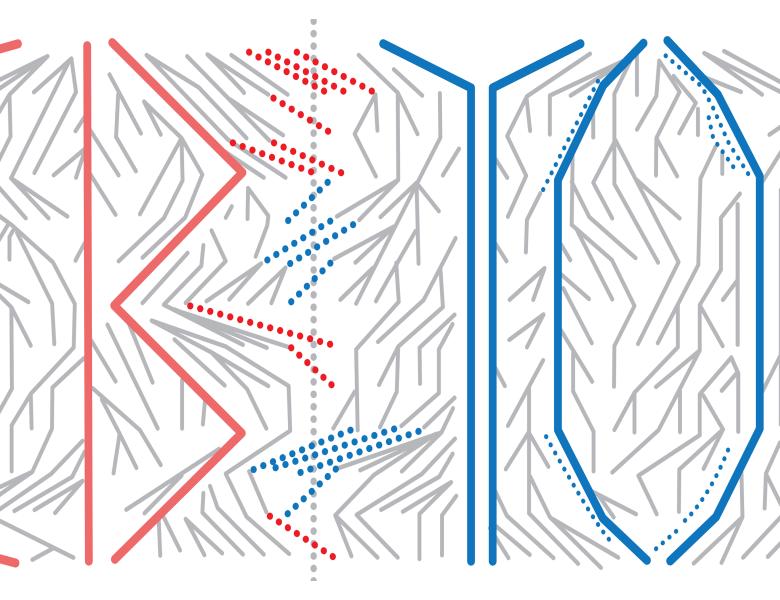

A fitness landscape is a genetic space—with two genotypes adjacent if they differ in a single locus—and a fitness function. Evolutionary dynamics produce a flow on this landscape from lower fitness to higher; reaching equilibrium only if a local fitness peak is found. I use computational complexity to question the common assumption that evolution on static fitness landscapes can quickly reach a local fitness peak. I do this by showing that the popular NK model of rugged fitness landscapes is PLS-complete for K >= 2; there are NK fitness landscapes where every adaptive path from some vertices is of exponential length. Alternatively—under the assumption that there are problems in PLS not solvable in polynomial time—this means that there are no evolutionary dynamics (known, or to be discovered, and not necessarily following adaptive paths) that can converge to a local fitness peak on all NK landscapes with K = 2. Further, there exist single-peaked landscapes with no reciprocal sign epistasis where the expected length of an adaptive path following strong selection weak mutation dynamics is exp(O(n^1/3)) even though an adaptive path to the optimum of length less than n is available from every vertex. Finally, I discuss the effects of approximate equilibria and a connection to the long term E.coli evolution project.

preprint: http://arxiv.org/abs/1308.5094

Everything not Forbidden is Allowed

Aviv Bergman, Albert Einstein College of Medicine

Explaining Adaptation in Genetic Algorithms with Uniform Crossover

Keki Burjorjee, Zite Inc.

Hyperclimbing is an intuitive, general-purpose, global optimization heuristic applicable to discrete product spaces with rugged or stochastic cost functions. The strength of this heuristic lies in its insusceptibility to local optima when the cost function is deterministic, and its tolerance for noise when the cost function is stochastic. Hyperclimbing works by decimating a search space, i.e., by iteratively fixing the values of small numbers of variables. The hyperclimbing hypothesis posits that genetic algorithms with uniform crossover (UGAs) perform optimization by implementing efficient hyperclimbing. Proof of concept for the hyperclimbing hypothesis comes from the use of an analytic technique that exploits algorithmic symmetry. By way of validation, we will present experimental results showing that a simple tweak inspired by the hyperclimbing hypothesis dramatically improves the performance of a UGA on large, random instances of MAX-3SAT and the Sherrington Kirkpatrick Spin Glasses problem. An exciting corollary of the hyperclimbing hypothesis is that a form of implicit parallelism more powerful than the kind described by Holland underlies optimization in UGAs. The implications of the hyperclimbing hypothesis for Evolutionary Computation and Artificial Intelligence will be discussed.