Abstract

Domain-independent planning is a classical AI task. The input specifies a set of objects and predicates with some initial valuation, an action model, and a goal. The action model describes a set of actions in terms of their preconditions and effects: the preconditions are properties that the domain must satisfy for an action to execute (e.g., a conjunction), and the effects are the changes to the values of predicates that result from the execution. The goal is a property stated in terms of the same predicates and objects. The domain-independent planning task is then, given this initial valuation, action model, and goal, to output a straight-line program consisting of actions whose execution results in the satisfaction of the goal.
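
As a concrete illustration of the planning task, the following minimal sketch represents a state as a set of true facts and an action as a precondition set with add and delete effects, then executes a straight-line plan and checks the goal. The class, the function names, and the toy block-stacking facts are all hypothetical choices made for this example; they are not taken from the talk.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    preconditions: frozenset  # facts that must be true before execution
    add_effects: frozenset    # facts made true by execution
    del_effects: frozenset    # facts made false by execution


def apply(state, action):
    """Return the successor state, or raise if a precondition fails."""
    if not action.preconditions <= state:
        raise ValueError(f"precondition of {action.name} not satisfied")
    return (state - action.del_effects) | action.add_effects


def execute(state, plan, goal):
    """Run a straight-line plan; report whether the goal holds at the end."""
    for action in plan:
        state = apply(state, action)
    return goal <= state


# Hypothetical toy domain: pick up block a, then stack it on block b.
pick = Action(
    "pick(a)",
    preconditions=frozenset({"on_table(a)", "hand_empty"}),
    add_effects=frozenset({"holding(a)"}),
    del_effects=frozenset({"on_table(a)", "hand_empty"}),
)
stack = Action(
    "stack(a,b)",
    preconditions=frozenset({"holding(a)", "clear(b)"}),
    add_effects=frozenset({"on(a,b)", "hand_empty"}),
    del_effects=frozenset({"holding(a)", "clear(b)"}),
)
initial = frozenset({"on_table(a)", "hand_empty", "clear(b)"})
goal = frozenset({"on(a,b)"})
```

With these definitions, `execute(initial, [pick, stack], goal)` succeeds, while `execute(initial, [stack], goal)` raises because `holding(a)` does not hold in the initial state.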

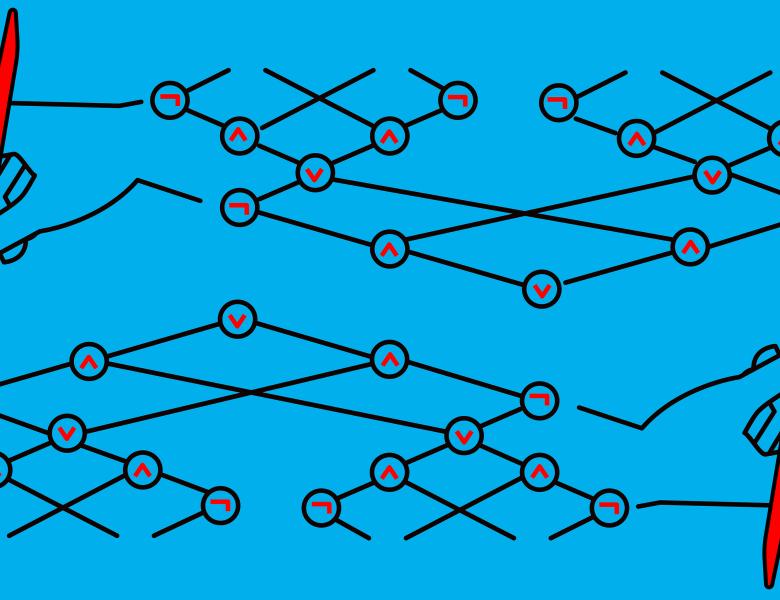

Here, we focus on the task of learning an action model from example executions. We propose a formulation of this task, in the spirit of distribution-free PAC learning, that permits an arbitrary domain-independent planner to safely use the action model we produce. Safety here means a guarantee that any plan produced by the planner both executes in the actual action model, i.e., the actual preconditions are satisfied at each step, and results in the satisfaction of the given goal in the actual action model. We show, for example, that there are polynomial-time algorithms for safe learning of action models expressible in the classical STRIPS formalism. We also show that many richer families of domains are not safely learnable.
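
The abstract does not spell out the learning algorithms, so the following is only a sketch of one conservative rule that fits the safety requirement in a grounded, propositional setting; it is an assumption made for illustration, not necessarily the method presented in the talk. Each state is a complete valuation, the learned precondition of an action is the set of literals that held in every observed pre-state, and the learned effect is the set of literals observed to change. Because the true preconditions hold in every observed pre-state, the learned precondition can only be stricter than the true one. All names here (`learn_conservative`, `valuation`, the door example) are hypothetical.

```python
def valuation(facts, true_facts):
    """Build a complete state: one (fact, truth value) literal per fact."""
    return frozenset((f, f in true_facts) for f in facts)


def learn_conservative(traces):
    """Learn (preconditions, effects) per action from observed transitions.

    traces: iterable of (pre_state, action_name, post_state), where each
    state is a frozenset of (fact, bool) literals over the same facts.
    """
    pre = {}
    eff = {}
    for before, name, after in traces:
        # Keep only literals that held in every pre-state seen so far.
        pre[name] = before if name not in pre else pre[name] & before
        # Literals true afterwards but not before were set by the action.
        eff[name] = eff.get(name, frozenset()) | (after - before)
    return {name: (pre[name], eff[name]) for name in pre}


# Hypothetical observations of a single action, "enter".
facts = ["door_open", "light_on", "inside"]
traces = [
    (valuation(facts, {"door_open", "light_on"}), "enter",
     valuation(facts, {"door_open", "light_on", "inside"})),
    (valuation(facts, {"door_open"}), "enter",
     valuation(facts, {"door_open", "inside"})),
]
model = learn_conservative(traces)
```

In this example the learned precondition for `enter` keeps `door_open` true and `inside` false, and drops `light_on` because it varied across observations. Actions that never appear in the traces are absent from the learned model, so a planner using it cannot select them.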

Based on joint work with Hai Son Le, Argaman Mordoch, and Roni Stern.