Image

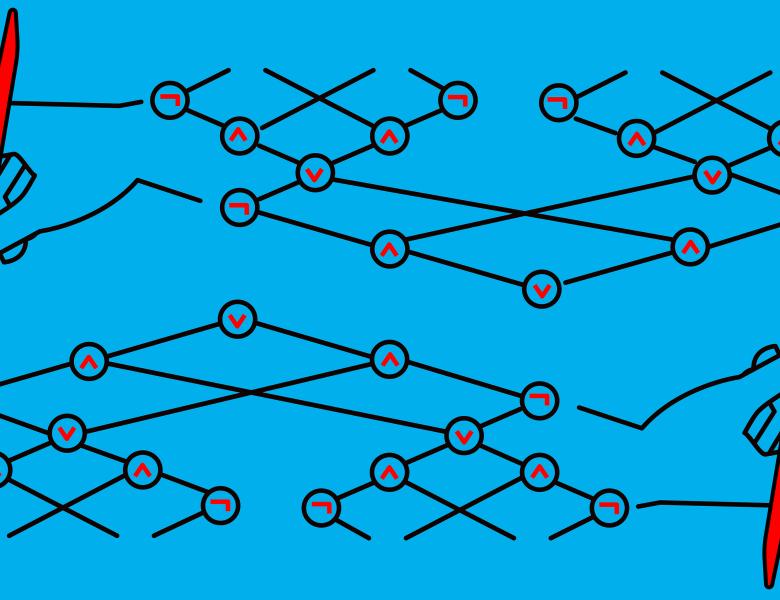

We present a Deep Neural Networks (DNN) architecture that is succinct: with a constant number of parameters, it is capable of representing any constant-sized Turing machine. In contrast, most prior DNNs are non-uniform in the sense that they require polynomial size to represent computations. One consequence is that our simple architecture can provably learn as well as any efficient learning algorithm, with simple random initializations. Our architecture combines recurrent and convolutional neural networks and builds on work of Abbe and Sandon [2020].