Abstract

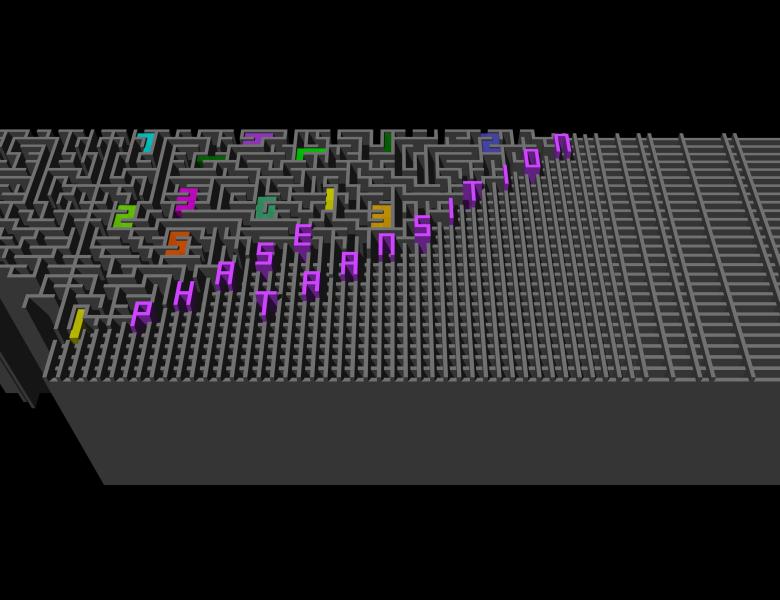

We introduce the factorization of certain randomly generated matrices as a probabilistic model for unsupervised feature learning. Using the replica method, we obtain an exact closed formula for the minimum mean-squared error and the sample complexity of reconstructing the features from data. An alternative way to derive this formula is via the state evolution of the associated approximate message passing algorithm. Analyzing the fixed points of this formula, we identify a gap between computationally tractable and information-theoretically optimal inference, associated with a first-order phase transition. The model can serve as a benchmark for general-purpose feature learning algorithms, and its analysis provides insight into the theoretical understanding of unsupervised learning from data.