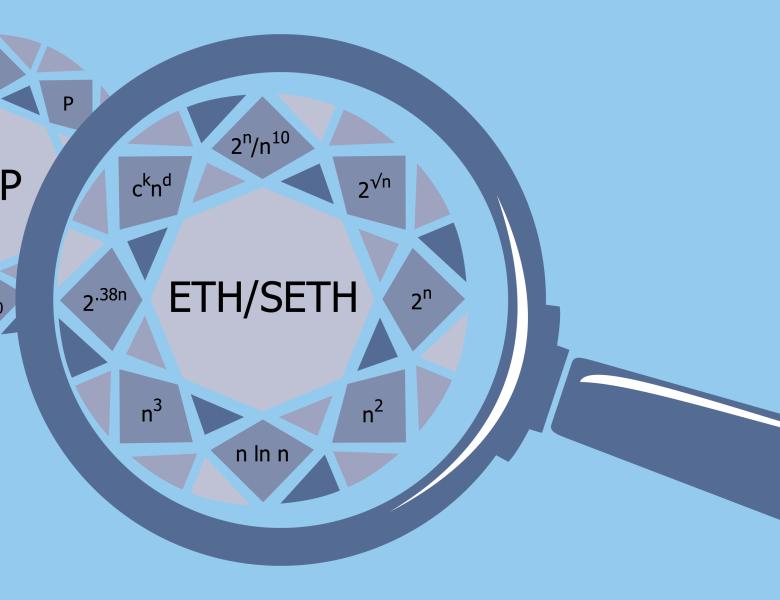

The hardness-versus-randomness paradigm shows that, in sufficiently general models of computation, constructing explicit functions that are hard for the model is equivalent to constructing pseudorandom generators that fool it. This paradigm is even known to hold for algebraic circuits, which are the most natural computational model for computing (multivariate) polynomials. However, most algebraic circuit classes for which explicit hard functions are known are unfortunately too weak to instantiate this connection. Fooling such circuit classes (that is, deterministically deciding whether such circuits compute the zero polynomial, also known as the polynomial identity testing problem) is typically only achieved through further non-trivial study of the lower bound technique.
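For orientation, the randomized baseline that polynomial identity testing seeks to derandomize can be sketched as follows. This is a minimal illustration and not a method from this work: it evaluates a black-box polynomial at random points of a prime field and relies on the Schwartz-Zippel lemma, which says a nonzero polynomial of total degree d vanishes at a uniformly random point of S^n with probability at most d/|S|. The field size `P`, the function name `is_probably_zero`, and the two example polynomials are assumptions chosen for the example.

```python
import random

# Assumed field size for illustration: the Mersenne prime 2^61 - 1.
P = 2**61 - 1


def is_probably_zero(poly, num_vars, trials=20, rng=None):
    """Randomized identity test for a black-box polynomial over F_P.

    `poly` takes a list of `num_vars` field elements and returns an integer.
    Returns False as soon as a nonzero evaluation is found (a certificate
    that the polynomial is nonzero); returns True if every trial evaluates
    to zero, which is correct with high probability by Schwartz-Zippel.
    """
    rng = rng or random.Random()
    for _ in range(trials):
        point = [rng.randrange(P) for _ in range(num_vars)]
        if poly(point) % P != 0:
            return False
    return True


def zero_poly(x):
    # (a + b)^2 - a^2 - 2ab - b^2 is identically zero.
    a, b = x
    return ((a + b) ** 2 - a * a - 2 * a * b - b * b) % P


def nonzero_poly(x):
    # (a + b)^2 - a^2 - b^2 equals 2ab, which is not the zero polynomial.
    a, b = x
    return ((a + b) ** 2 - a * a - b * b) % P
```

A deterministic identity test for a circuit class replaces the random points above with a fixed, explicitly constructed set of evaluation points that works for every circuit in the class; constructing such a set is the task that the lower bound techniques are used for.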

We will discuss one recent method of turning lower bounds into such derandomization. This method requires a "robust" lower bound: one that proves not only that a polynomial f(x) is hard, but also that f(x)+g(x) is hard whenever g(x) is a "lower order term". Important lower bound techniques (such as Nisan's partial derivative matrix, the partial derivative method of Nisan-Wigderson, and the recent shifted partial derivative method of Kayal and Gupta-Kamath-Kayal-Saptharishi) can all be shown to be robust in certain cases, thus yielding various derandomizations of polynomial identity testing.

In particular, the robustness of the shifted partial derivative method (new to this work) can be pushed to yield the first efficient deterministic algorithm for deciding whether a constant-degree polynomial divides a sparse polynomial.