Abstract

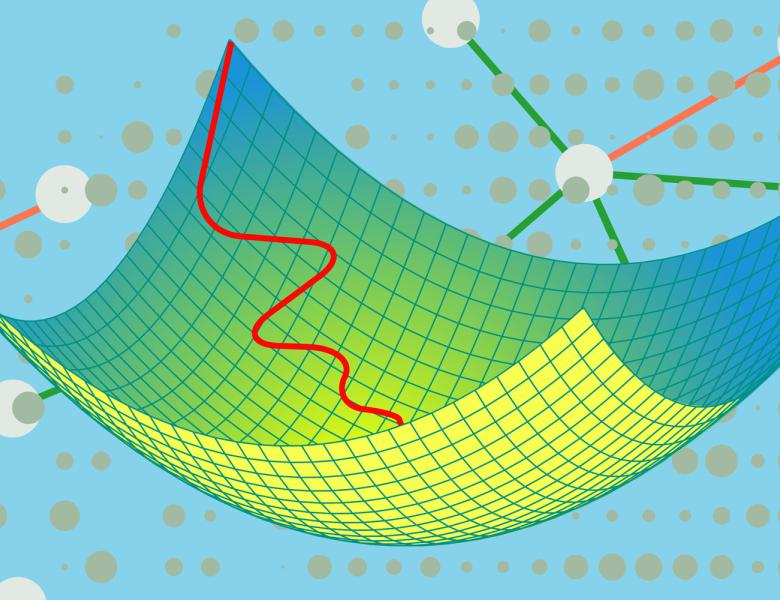

I will discuss recent results on learning a single neuron with ReLU and other activation functions, in the agnostic (i.e., adversarial label noise) setting. The key ingredient of this work is a surrogate stochastic convex optimization problem that we show can be solved with low sample and computational complexity while achieving near-optimal error guarantees. Our results are enabled by local error bounds ("sharpness") from optimization theory that we establish for this problem under mild distributional assumptions that capture sub-exponential, heavy-tailed (with polynomial tails), and even some discrete distributions. Surprisingly, for all these distributional families, the constant in the error bound is an absolute constant — independent of the problem dimension. This is quite a rare property to hold for optimization problems.