Abstract

The PAC-learning model is stringently worst-case; it requires learning all concepts in some class, over all distributions of examples. As a result, it has proven very difficult to develop efficient PAC-learning algorithms (outside simple concept classes). Additionally, attempts to at least construct cryptographic primitives based on the hardness of PAC-learning have failed. Heuristic PAC-learning relaxes PAC-learning by requiring only that most of the concepts in the class are efficiently PAC-learnable with respect to all (or perhaps specific) example distributions. This setting has enabled recent progress on connections between learnability and cryptography. For example, Nanashima (COLT `21) obtained a polynomial time, distribution-specific heuristic PAC-learning algorithm for polynomial size circuits, conditional on the nonexistence of infinitely-often one-way functions. It remains an important open problem to obtain worst-case PAC learning from nonexistence of standard cryptographic primitives.

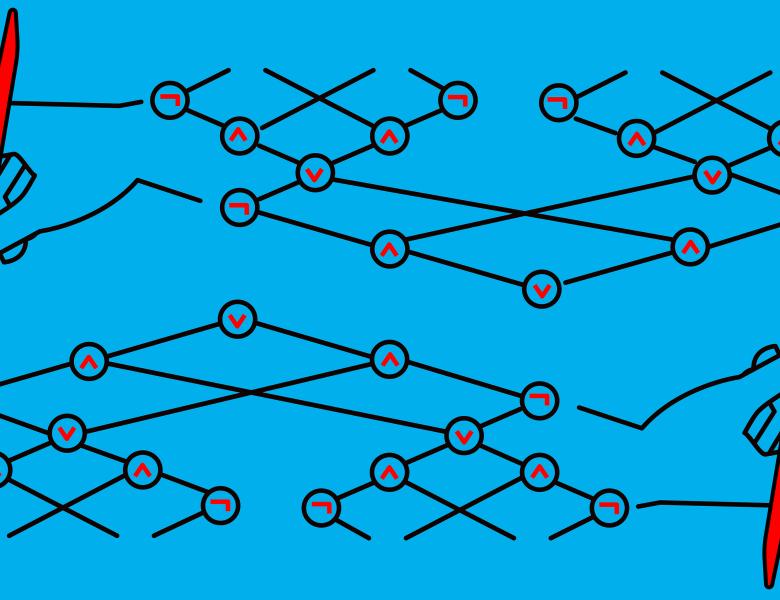

In this talk, we will discuss new approaches to strengthening the connections between heuristic PAC-learning and cryptography. Specifically, we will discuss how to use the construction of pseudo-random functions from families of pseudo-random synthesizers due to Naor and Reingold (JCSS `99), to extend previous results in the area in three key ways:

(1) To prove that most polynomial size circuits are weakly PAC-learnable over most polynomial time samplable example distributions, under the assumption that (roughly) pseudo-random functions do not exist. This result strengthens Nanashima's by obtaining one PAC-Learning algorithm for most example distributions, while Nanashima's relied heavily on the flexibility afforded by the distribution-specific heuristic PAC-learning setting. However, our algorithm only reconstructs hypotheses that predict the concept with advantage slightly better than random guessing.

(2) To “scale-down” the result NC, in the sense that (roughly) most concepts "representable" by NC are shown to be weakly PAC-learnable with respect to most NC-samplable example distributions if no NC-computable pseudo-random functions exist (such an implication was previously only known for P). Also, by restricting to the uniform distribution over examples, we obtain a strong PAC-learning algorithm for the "scaled-down" case.

(3) To prove that the existence of “special-case” natural properties implies PAC-learning with random examples is possible for random concepts, in contrast to the work of Carmosino et al. (CCC `16) which relied on membership queries, but handled the worst-case.

This talk is based on joint work with Marco Carmosino.