Abstract

Non-convex optimization with local search heuristics has been widely used in machine learning, achieving many state-of-the-art results. It has become increasingly important to understand why these algorithms converge to high-quality solutions on typical data. The landscapes of many objective functions in learning have been conjectured to have the geometric property that "all (or most) local optima are (approximately) global optima", and thus these objectives can be optimized efficiently by local search algorithms. However, establishing such a property can be difficult.

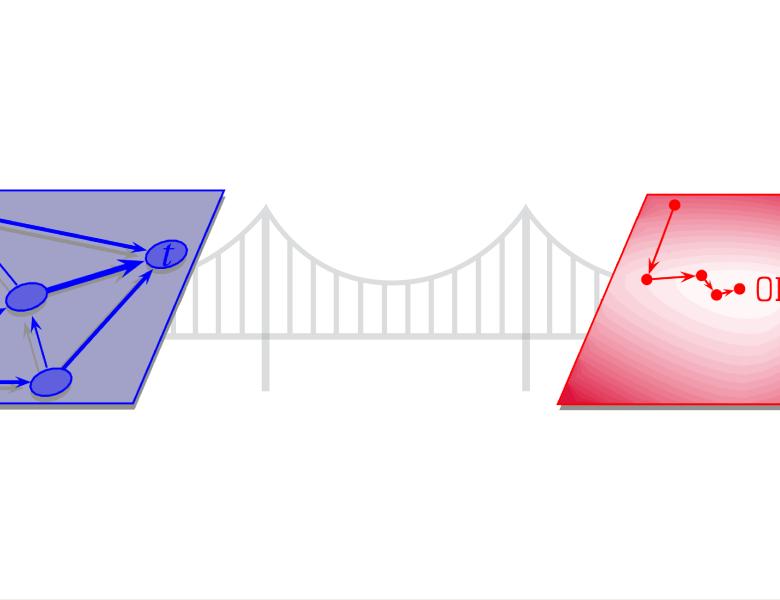

In this talk, I will showcase mathematical techniques for establishing results of this type. As a warm-up, I will start with the top eigenvector problem and discuss its relation to the natural non-convex objective function for matrix completion. Then, we will focus on analyzing the optimization landscape of tensor decomposition using the Kac-Rice formula from the theory of random fields and geometry. I will end with some conjectures along this line of research.