Abstract

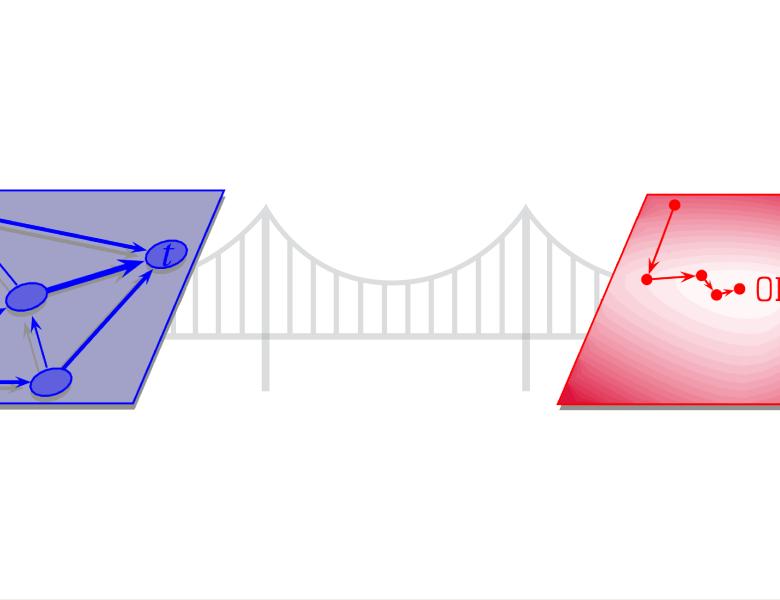

A simple yet powerful idea for dealing with large-scale problems is to decompose them into smaller subproblems that are much easier to solve. This idea underlies successful methods such as SGD and coordinate descent. It is natural to apply the same idea to linearly constrained problems, which leads to an algorithm called ADMM (Alternating Direction Method of Multipliers). Unfortunately, when there are three blocks, ADMM was previously shown to possibly diverge, even when solving a linear system. In this talk, we discuss a new variant of ADMM, called RP-ADMM (Randomly Permuted ADMM), and prove its expected convergence for solving linear systems.
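To make the randomly permuted update order concrete, the following is a minimal sketch of one RP-ADMM pass on a square linear system Ax = b with a zero objective. It is an illustration under stated assumptions, not the speaker's implementation: each block is a single scalar variable, the penalty parameter `beta` is fixed at 1, and the function name `rp_admm_pass`, the sign convention for the multiplier `lam`, and the example matrix are all hypothetical choices made here.

```python
import numpy as np


def rp_admm_pass(A, b, x, lam, beta=1.0, rng=None):
    """One pass of randomly permuted ADMM for Ax = b with zero objective.

    Assumed augmented Lagrangian (a convention chosen for this sketch):
        -lam^T (A x - b) + (beta / 2) * ||A x - b||^2
    Each scalar block x[i] is minimized exactly, in a freshly drawn
    random order, and the multiplier is updated once at the end.
    """
    rng = np.random.default_rng() if rng is None else rng
    x = x.copy()
    for i in rng.permutation(A.shape[1]):
        a_i = A[:, i]
        # Contribution of all other blocks, using their latest values.
        rest = A @ x - a_i * x[i]
        # Exact minimizer of the augmented Lagrangian over x[i].
        x[i] = a_i @ (b - rest + lam / beta) / (a_i @ a_i)
    # Dual (multiplier) update after the primal sweep.
    lam = lam - beta * (A @ x - b)
    return x, lam


# Arbitrary nonsingular 3x3 system, chosen only for illustration.
A = np.array([[1.0, 1.0, 1.0],
              [1.0, 1.0, 2.0],
              [1.0, 2.0, 2.0]])
b = np.array([1.0, 2.0, 3.0])

rng = np.random.default_rng(0)
x, lam = np.zeros(3), np.zeros(3)
for _ in range(200):
    x, lam = rp_admm_pass(A, b, x, lam, rng=rng)
print("residual norm after 200 passes:", np.linalg.norm(A @ x - b))
```

Replacing `rng.permutation(A.shape[1])` with `range(A.shape[1])` gives the fixed cyclic order, which is the variant for which divergence was reported. Note that the convergence result discussed in the talk is in expectation, so a single random run is only suggestive.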

A main technical lemma is that the eigenvalues of the expected iteration matrix of RP-CD (Randomly Permuted Coordinate Descent) lie in (-1/3, 1). As a byproduct, the convergence speed of RP-CD can be reduced to an open question on the matrix AM-GM inequality. Motivated by the proof technique, we also propose a new update rule called the Bernoulli-randomized rule. We will discuss some interesting numerical observations about RP-ADMM and a few other variants, which further validate the effectiveness of random permutation.
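The eigenvalue claim can be checked numerically on a small instance. The sketch below assumes RP-CD is exact coordinate minimization of the quadratic ½ xᵀSx − bᵀx for a symmetric positive definite S, so that one pass in a given order is a Gauss-Seidel sweep in that order. It averages the resulting iteration matrices over all n! permutations. The function name `expected_rpcd_matrix` and the random test matrix are hypothetical, and brute-force enumeration is only practical for very small n.

```python
import itertools

import numpy as np


def expected_rpcd_matrix(S):
    """Average the coordinate-descent iteration matrix over all orders.

    For an update order p, one pass acts on the permuted variables
    y = x[p] as y <- (I - L_p^{-1} S_p) y + const, where S_p is S with
    rows and columns reordered by p and L_p is its lower-triangular
    part including the diagonal.
    """
    n = S.shape[0]
    total = np.zeros((n, n))
    count = 0
    for perm in itertools.permutations(range(n)):
        p = np.array(perm)
        S_p = S[np.ix_(p, p)]
        L_p = np.tril(S_p)
        M_p = np.eye(n) - np.linalg.solve(L_p, S_p)
        # Map the iteration matrix back to the original variable order.
        M = np.zeros((n, n))
        M[np.ix_(p, p)] = M_p
        total += M
        count += 1
    return total / count


# Random symmetric positive definite matrix (illustrative only).
rng = np.random.default_rng(0)
B = rng.standard_normal((4, 4))
S = B.T @ B + np.eye(4)

eigs = np.linalg.eigvals(expected_rpcd_matrix(S)).real
print("smallest eigenvalue:", eigs.min())
print("largest eigenvalue: ", eigs.max())
```

For any such S the printed values should fall strictly between -1/3 and 1, in line with the lemma. This is a sanity check on one instance, not a substitute for the proof.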