Abstract

Over the last few years, considerable progress has been made in improving the robustness of models against adversarial attacks. However, current defenses are typically developed for a particular threat model (e.g., L2) and often generalize poorly to “unforeseen” threat models (those not used in training).

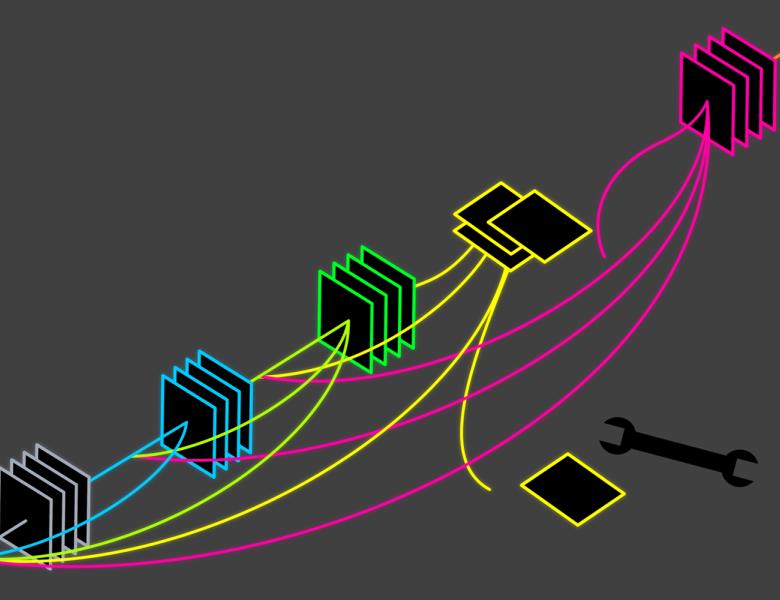

In this talk, I will present our recent results addressing this issue. We present adversarial attacks and defenses for the perceptual adversarial threat model: the set of all perturbations to natural images that can mislead a classifier but are imperceptible to the human eye. The perceptual threat model is broad and encompasses L2, L∞, spatial, and many other existing adversarial threat models. However, it is difficult to determine whether an arbitrary perturbation is imperceptible without humans in the loop. To address this, we propose using a “neural perceptual distance”, an approximation of the true perceptual distance between images computed with neural networks. We then propose the “neural perceptual threat model”, which includes all adversarial examples within a bounded neural perceptual distance of natural images. Remarkably, we find that a defense against the neural perceptual threat model generalizes well to many types of unseen Lp and non-Lp adversarial attacks.
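The two threat models described above can be written out as a rough formal sketch. All notation here is assumed for illustration and does not come from the abstract: f is the classifier, x a natural image with true label y, x̃ a candidate adversarial example, d* the true (human) perceptual distance, d its neural approximation, and ε*, ε the corresponding bounds. The last line shows one plausible form of d, a distance between feature embeddings φ of some neural network; the abstract does not specify the actual construction.

```latex
% Requires amsmath for \text. All symbols are illustrative assumptions.

% Perceptual threat model: x~ is misclassified yet perceptually
% indistinguishable from x under the true perceptual distance d^*.
\[
  f(\tilde{x}) \neq y
  \quad \text{and} \quad
  d^{*}(x, \tilde{x}) \le \epsilon^{*}
\]

% Neural perceptual threat model: d^* is replaced by a neural
% approximation d, which can be evaluated without humans in the loop.
\[
  f(\tilde{x}) \neq y
  \quad \text{and} \quad
  d(x, \tilde{x}) \le \epsilon
\]

% One possible instantiation of d (an assumption, not stated in the
% abstract): Euclidean distance between network feature embeddings.
\[
  d(x, \tilde{x}) = \lVert \phi(x) - \phi(\tilde{x}) \rVert_{2}
\]
```

Under this reading, the only difference between the two models is the distance function, which is why the quality of the approximation d determines how closely the neural threat model tracks the perceptual one.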

This is joint work with Cassidy Laidlaw and Sahil Singla.