Abstract

Given a set of points in d dimensions, imagine putting a Gaussian distribution around each of them. How quickly can we evaluate the sum of these Gaussian densities at a new point? This computational problem (and its generalization for densities other than the Gaussian) is called kernel density estimation. This problem arises as a basic primitive in statistics (non-parametric density estimation), machine learning (kernel methods) and scientific computing (physical simulations).

The batch version of this question (compute the sum of n kernels at m given query points) is addressed by the celebrated fast multiple method from the late 80s which has linear or near-linear complexity for points in 2 and 3 dimensions. The high dimensional case has been challenging because at a high level, typical space partitioning approaches have an exponential dependence on the dimension.

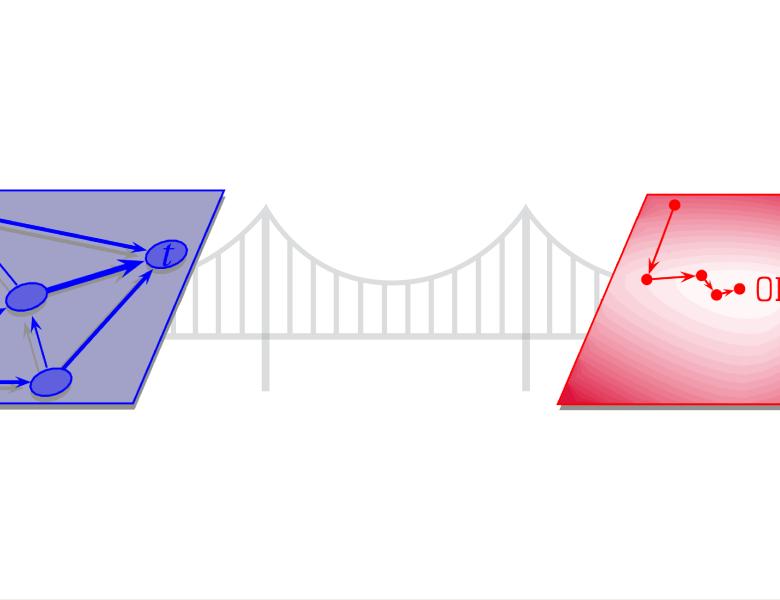

In this talk, I will show that locality sensitive hashing (introduced in the late 90s for the approximate nearest neighbor problem in high dimensions) can be adapted to devise unbiased estimators for kernel density in high dimensions.