
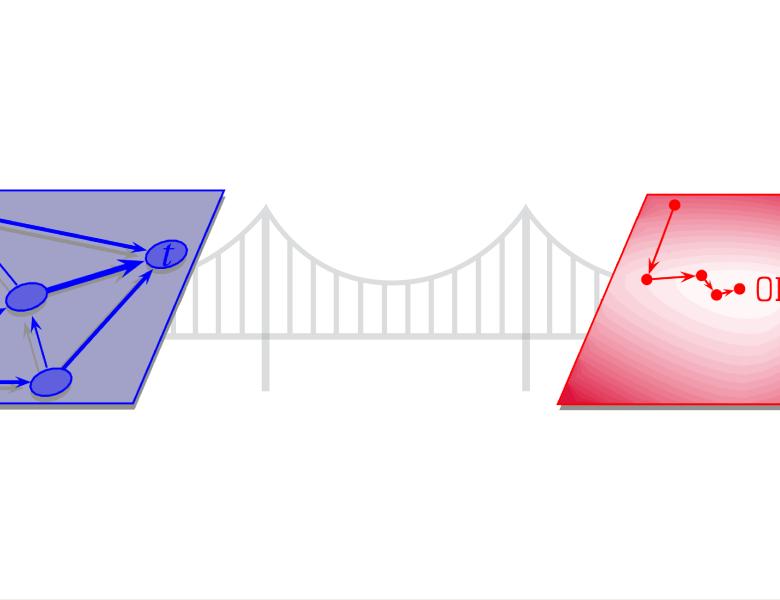

We will present a very general trust-region framework for unconstrained stochastic optimization that is based on the standard deterministic trust-region framework. In particular, it retains that framework's desirable features, such as the step acceptance criterion, the adjustment of the trust-region radius, and the ability to use second-order models.
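To make these three features concrete, here is a minimal Python sketch of one stochastic trust-region variant. It is not the framework from this talk. The helper names `f_est`, `grad_est`, `hess_est` and `cauchy_step` are hypothetical, and the parameter values are illustrative assumptions. Each iteration builds a quadratic model from random gradient and Hessian estimates and takes a Cauchy step inside the trust region. It accepts the step when the ratio `rho` of estimated actual decrease to predicted decrease is at least `eta1` and the gradient estimate is not too small relative to the radius (`||g|| >= eta2 * delta`). Accepted steps expand the radius and rejected steps shrink it.

```python
import numpy as np


def cauchy_step(g, H, delta):
    """Minimize the model m(s) = g.s + 0.5 s.H.s along -g subject to ||s|| <= delta."""
    gnorm = np.linalg.norm(g)
    if gnorm == 0.0:
        return np.zeros_like(g)
    curvature = g @ H @ g
    # Go to the boundary if the model is not convex along -g; otherwise stop at the 1-D minimizer.
    tau = 1.0 if curvature <= 0 else min(1.0, gnorm**3 / (delta * curvature))
    return -(tau * delta / gnorm) * g


def stochastic_trust_region(f_est, grad_est, hess_est, x0, delta0=1.0,
                            eta1=0.1, eta2=1e-3, gamma=2.0,
                            delta_max=10.0, max_iter=200, seed=0):
    """Sketch of a trust-region loop driven by random function, gradient and Hessian estimates."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    delta = delta0
    for _ in range(max_iter):
        g = grad_est(x, rng)                       # random gradient estimate
        H = hess_est(x, rng)                       # random (or fixed) Hessian model
        s = cauchy_step(g, H, delta)
        pred = -(g @ s + 0.5 * s @ H @ s)          # decrease predicted by the model
        if pred <= 0:                              # only when g == 0 (up to rounding)
            delta /= gamma
            continue
        # Fresh estimates of f at the current point and at the trial point.
        rho = (f_est(x, rng) - f_est(x + s, rng)) / pred
        if rho >= eta1 and np.linalg.norm(g) >= eta2 * delta:
            x = x + s                              # successful step: accept and expand
            delta = min(gamma * delta, delta_max)
        else:
            delta /= gamma                         # unsuccessful step: reject and shrink
    return x


# Usage on a noisy quadratic f(x) = 0.5 ||x - target||^2 observed with additive noise.
target = np.array([1.0, -2.0])


def f_est(x, rng, n=64):
    # Sample-average estimate of f: true value plus the mean of n noise draws.
    return 0.5 * np.sum((x - target) ** 2) + rng.normal(0.0, 0.1, size=n).mean()


def grad_est(x, rng, n=64):
    # Sample-average estimate of the gradient x - target.
    return (x - target) + rng.normal(0.0, 0.1, size=(n, x.size)).mean(axis=0)


def hess_est(x, rng):
    # The exact Hessian of this quadratic, used as a second-order model.
    return np.eye(x.size)


x_opt = stochastic_trust_region(f_est, grad_est, hess_est, x0=np.zeros(2))
print(x_opt)
```

The Cauchy step is the simplest choice that still uses the second-order model `H`. A more accurate subproblem solver could be substituted without changing the acceptance and radius-update logic.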

We show that this framework has favorable convergence properties when the true objective function is smooth.

We then show that this framework can be used to directly optimize the 0-1 loss or the AUC of a classifier, objectives whose optimization is considered NP-hard in the finite-sum case.
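For reference, the two finite-sum objectives can be written as follows for a linear classifier. This sketch assumes labels in {-1, +1} and a linear score `X @ w`; both are illustrative assumptions, and the function names are hypothetical. Both functions are piecewise constant in `w`, so their gradients are zero almost everywhere. This is one reason plain gradient-based methods do not apply to them directly.

```python
import numpy as np


def zero_one_loss(w, X, y):
    """Fraction of samples misclassified by sign(X @ w); labels y are in {-1, +1}.
    A zero margin is counted as an error."""
    margins = y * (X @ w)
    return float(np.mean(margins <= 0))


def one_minus_auc(w, X, y):
    """1 - AUC, where AUC is the fraction of (positive, negative) pairs whose
    scores X @ w are ordered correctly. Ties are counted as incorrect here
    (a common alternative counts them as 1/2)."""
    scores = X @ w
    pos, neg = scores[y == 1], scores[y == -1]
    correct = pos[:, None] > neg[None, :]   # entry (i, j): positive i outranks negative j
    return 1.0 - float(correct.mean())


X = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]])
y = np.array([1, 1, -1, -1])

print(zero_one_loss(np.array([1.0, 1.0]), X, y), one_minus_auc(np.array([1.0, 1.0]), X, y))
# A small perturbation of w leaves the loss unchanged: it is piecewise constant in w.
print(zero_one_loss(np.array([1.001, 1.0]), X, y))
print(zero_one_loss(np.array([1.0, -1.0]), X, y), one_minus_auc(np.array([1.0, -1.0]), X, y))
```

The AUC term compares every positive with every negative, so one evaluation costs O(n_pos * n_neg). Evaluating it on random subsamples gives the kind of noisy function estimate a stochastic trust-region method consumes.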