Abstract

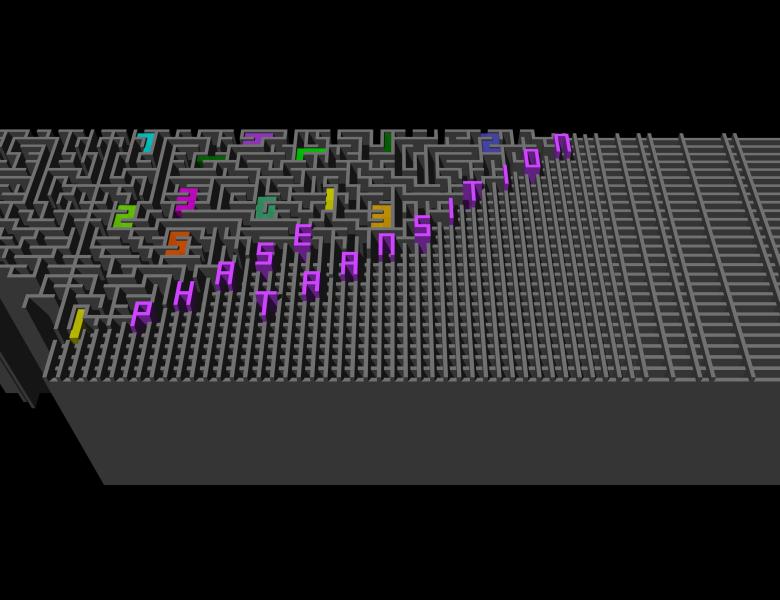

Over the past decade, a rich interaction has grown up between statistical physics and statistical inference. In particular, physics often predicts phase transitions where, if a data set becomes too sparse or too noisy, it suddenly becomes impossible to find its underlying structure, or even to distinguish it from a “null model” with no structure at all. For instance, in the community detection problem in networks, there is a transition below which the vertices cannot be labeled better than chance, and the network cannot be distinguished from an Erdos-Renyi random graph. Similarly, in the clustering problem in Gaussian mixture models, it becomes impossible to find the clusters, or even to tell whether clusters exist, i.e., to distinguish the data from a single Gaussian.

Many of the physics predictions have been made rigorous, but there is still a very lively interchange between the fields, both about these transitions and about asymptotically optimal algorithms. In particular, while efficient message-passing and spectral algorithms are known that succeed down to the so-called Kesten-Stigum bound, in a number of problems we believe that it is information-theoretically possible (but perhaps not computationally feasible) to go further. I will give a friendly introduction to this field, and present some new results proving this conjecture.

This is joint work with Jess Banks, Joe Neeman, Praneeth Netrapalli, Roman Vershynin, and Jiaming Xu.