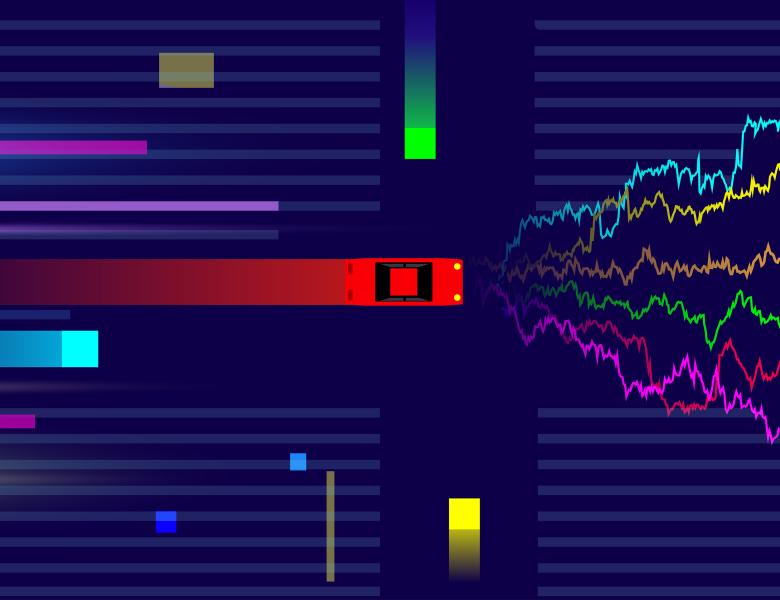

As discussed in the previous talks, the two main paradigms for online learning with partial information assume either that rewards are drawn i.i.d. from a fixed distribution in every round (stochastic bandits) or that they are completely arbitrary, possibly chosen by an adversary (adversarial bandits). This tutorial focuses on recent developments in hybrid models, and the corresponding algorithms, that aim to shed light on the space between these two extremes.