Many estimation problems have an interesting structure even in the random case. Examples include reconstructing a vector from random linear projections (as in compressed sensing or coding theory) and recovering the low-rank structure of a matrix or tensor corrupted by random noise (sparse PCA, planted clique, submatrix localisation, clustering of mixtures of Gaussians, etc.).

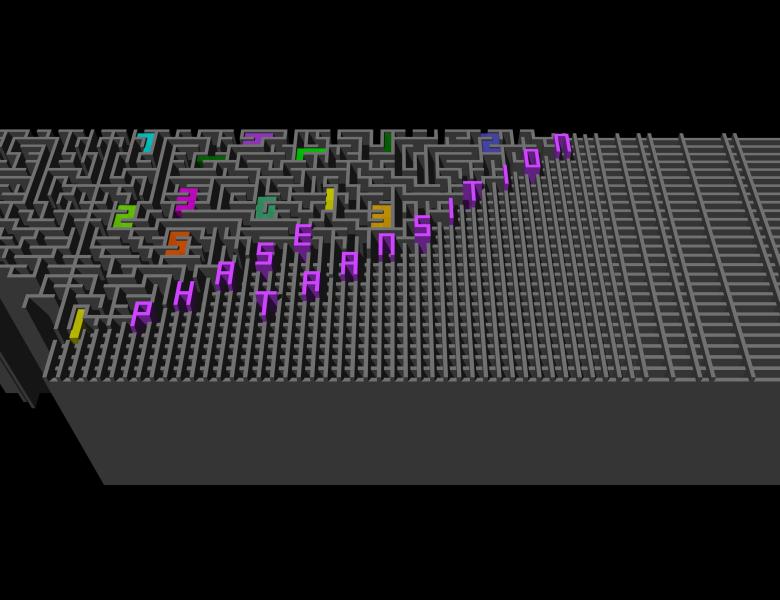

There is now a number of such estimation problems for which we know how to prove the replica predictions for the mutual information, the minimum mean-square error (MMSE) and the phase transitions. I will discuss how recent ideas from statistical physics, mathematical physics and information theory have led, on the one hand, to new mathematical insights into these problems, including a characterisation of the best achievable performance, and, on the other, to an understanding of the behaviour of approximate message passing (AMP), an algorithm that turns out to be optimal for a large class of problems and parameter regimes.
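To make the AMP/state-evolution picture concrete, here is a minimal sketch, assuming one specific instance of "low-rank structure polluted by noise": the rank-one spiked Wigner model `A = (lam/n) x x^T + W`, with `x_i` uniform in {-1, +1}, `W` a GOE matrix whose off-diagonal entries have variance 1/n, and `lam` known. Under these assumptions the posterior-mean denoiser reduces to `tanh(lam * u)`, and the AMP iterate is corrected by the Onsager term `b_t f_{t-1}`. The helper names `spiked_wigner`, `amp` and `state_evolution` are hypothetical, the small random initialisation is a heuristic choice, and this is an illustration rather than the method presented in the talk.

```python
import math

import numpy as np


def spiked_wigner(n, lam, rng):
    """Sample A = (lam/n) x x^T + W, x_i uniform in {-1, +1}, W a GOE matrix
    with off-diagonal variance 1/n."""
    x = rng.choice([-1.0, 1.0], size=n)
    g = rng.standard_normal((n, n))
    w = (g + g.T) / math.sqrt(2 * n)
    return (lam / n) * np.outer(x, x) + w, x


def amp(a, lam, rng, n_iter=50):
    """AMP with denoiser f(u) = tanh(lam * u), the posterior mean for the +-1
    prior when the effective SNR matches lam (Bayes-optimal setting)."""
    n = a.shape[0]
    u = 1e-3 * rng.standard_normal(n)  # small, uninformative random start
    f_prev = np.zeros(n)               # f_{t-1}; zero so step 0 has no Onsager term
    for _ in range(n_iter):
        f = np.tanh(lam * u)
        b = lam * np.mean(1.0 - f ** 2)  # Onsager coefficient (1/n) * sum_i f'(u_i)
        u, f_prev = a @ f - b * f_prev, f
    return u


def state_evolution(lam, n_iter=100, n_mc=200_000, seed=1):
    """Iterate s <- lam^2 * E[tanh(s + sqrt(s) Z)], Z ~ N(0, 1), by Monte Carlo.
    s is the effective signal-to-noise ratio of the AMP iterate."""
    z = np.random.default_rng(seed).standard_normal(n_mc)
    s = 1e-3
    for _ in range(n_iter):
        s = lam ** 2 * np.mean(np.tanh(s + np.sqrt(s) * z))
    return s


rng = np.random.default_rng(0)
lam = 2.0
a, x = spiked_wigner(2000, lam, rng)
u = amp(a, lam, rng)
# Overlap of sign(u) with x, up to the global sign ambiguity of the model.
empirical = abs(np.mean(np.sign(u) * x))
# State evolution predicts the iterate behaves like a scalar Gaussian channel,
# so the sign estimate has overlap 2*Phi(sqrt(s)) - 1 = erf(sqrt(s / 2)).
predicted = math.erf(math.sqrt(state_evolution(lam) / 2))
print(f"empirical overlap {empirical:.3f}, state-evolution prediction {predicted:.3f}")
```

The point of pairing the two computations is that the high-dimensional AMP run and the one-dimensional state-evolution recursion should agree up to finite-size fluctuations; in this setting `lam = 1` is the threshold below which neither recovers a non-trivial overlap.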