Theory at the Institute and Beyond, February 2023

by Venkatesan Guruswami (Simons Institute)

This semester at the Simons Institute, the Meta-Complexity program is buzzing along with intense activity in the form of multiple reading groups and a weekly seminar, on top of the usual three workshops and boot camp. A large number of complexity theorists have converged at the Institute, including several students, postdocs, and junior researchers. And all this complexity action is intermixed with some good and varied fun in the form of many self-organized social activities.

Theory readers know what complexity is, but what is the “meta” for? An online dictionary definition of “meta” is a term describing something that “consciously references or comments upon its own subject or features” — e.g., “A movie about making a movie is just so meta—especially when the actors criticize the acting.”

So meta-complexity is the study of complexity of problems that themselves pertain to complexity. A prototypical example is circuit minimization, where the goal is to find the smallest circuit for a given specification. This is modeled as the minimum circuit size problem (MCSP), which is one of the lead actors in meta-complexity: Given the truth table of an n-bit Boolean function f, and a size parameter s, does f have a Boolean circuit of size at most s? (Note that the input size is 2n.)

The MCSP problem was explicitly defined in 2000 by Kabanets and Cai, inspired by Razborov and Rudich’s seminal work on natural proofs from 1994. (Upon some reflection, one can realize that a natural property against some class of circuits is really an average-case, one-sided error algorithm for MCSP on circuits of that class.) Being a “natural” problem, MCSP was in fact considered implicitly even earlier, including in early works on complexity in the former Soviet Union. Yablonski claimed in 1959 that MCSP is not in P (to use modern terminology — the class P wasn't even defined then). MCSP is easily seen to be in NP — indeed, one can guess a circuit and then check if it computes the function correctly on all inputs — so we of course can’t yet prove that it lies outside P.

Leading up to his seminal work on NP-completeness, Levin was in fact trying to show NP-hardness of MCSP, but didn’t succeed. One of the six problems that Levin showed NP-hardness of was DNF-MCSP, where one tries to find a DNF formula of minimum size to compute a given truth table. (Technically, Levin proved only NP-hardness of the partial-function version of the question, where we don't care about the function value on some inputs.) In fact, this was Problem 2 on Levin’s list, between Problem 1, which was set cover, and Problem 3, which was Boolean formula satisfiability.

The complexity of MCSP remains open — we do not know if it is in P or NP-complete. Resolving this is a natural challenge, but on the surface it might seem like a rather specific curiosity. One reason to care about MCSP is that any NP-hardness proof faces some fundamental challenges — in particular, the function produced on No instances must have large circuits, giving an explicit function in EXP that has large circuits, which seems beyond the reach of current techniques. One might try to circumvent this via randomized or exponential-time reductions, and this has indeed fueled some very interesting recent results, as well as intriguing connections to learning theory, average-case complexity, cryptography, and pseudorandomness.

An “extraneous” reason to care about MCSP and meta-complexity is that the underlying techniques have led to some of the best insights on certain foundational questions that have nothing to do with meta-complexity per se. Meta-complexity has been energized by some significant recent progress on both of these (internal and extraneous) fronts. These advances and momentum are fueling a lot of activity and optimism in the Simons Institute program this semester.

Impagliazzo’s worlds

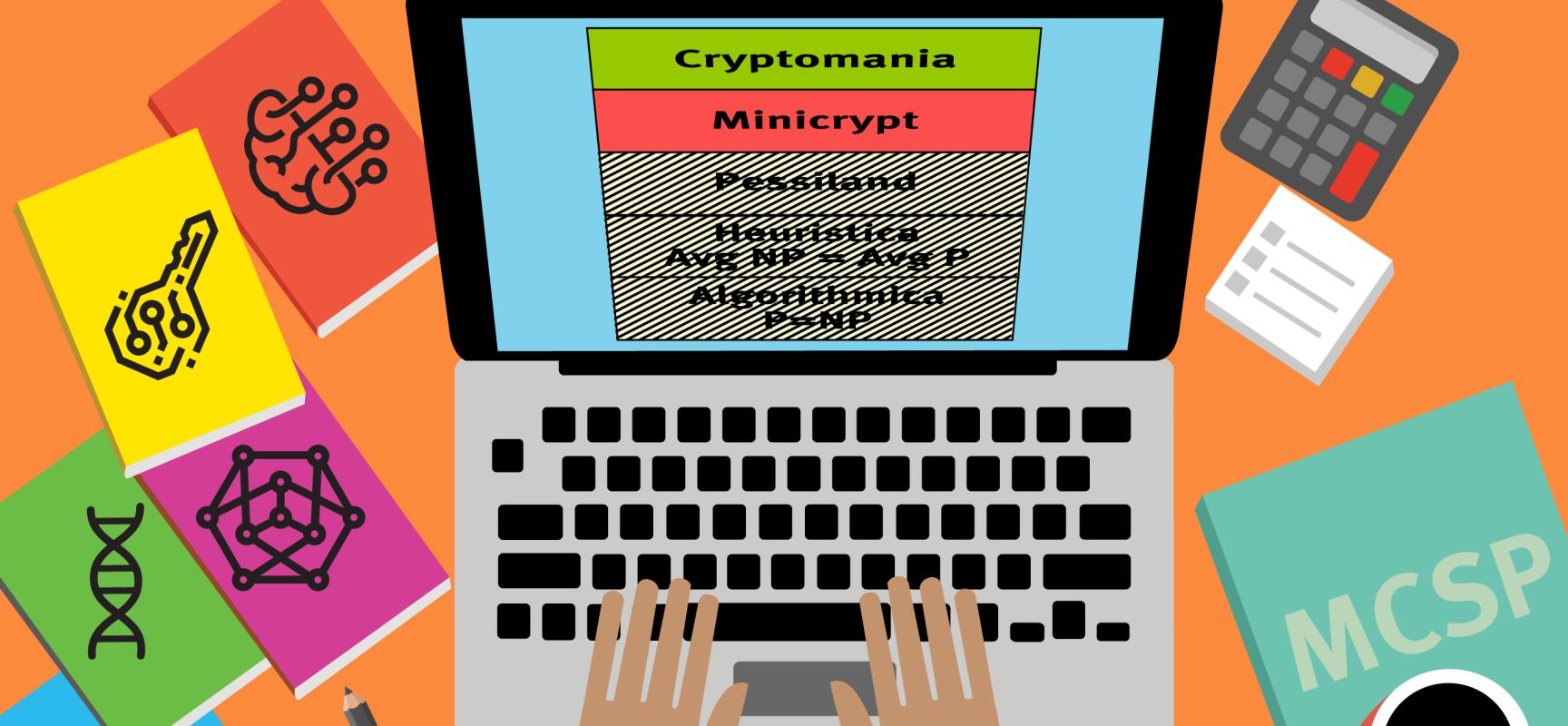

I will now discuss some of the broader complexity ramifications of meta-complexity, as evidenced by some exciting recent works. To do so, we take a brief tour into five possible complexity-theoretic worlds we might live in, as put forth in an insightful and influential survey by Impagliazzo from 1995. These complexity-theoretic scenarios, in increasing strength of intractability, are Algorithmica, Heuristica, Pessiland, Minicrypt, and Cryptomania. Presently, we can’t exclude any of these scenarios, though the general working belief is that we have secure public-key cryptosystems and thus live in Cryptomania.

Ruling out each of these possible worlds corresponds to a central open question in complexity theory: Is P ≠ NP (rules out Algorithmica)? Does P ≠ NP imply that NP is hard on average (rules out Heuristica)? Does average-case hardness of NP imply the existence of one-way functions (rules out Pessiland)? Does the existence of one-way functions imply public-key cryptography (rules out Minicrypt)?

While these questions are all still very much open and seemingly out of reach of current techniques, meta-complexity has given some very interesting results, and perhaps the best hope so far toward the challenge of ruling out Heuristica and Pessiland. This, in turn, is related to the long-standing and elusive goal of basing cryptography on as weak an assumption as P ≠ NP.

In the spirit of ruling out Heuristica, a striking result of Hirahara (from STOC 2021) gave a worst-case to average-case reduction, showing that certain strong but plausible worst-case lower bounds (namely that UP is not solvable by exp(O(n/log n)) time deterministic algorithms) imply the average-case intractability of some NP problem (formally, DistNP is not contained in AvgP). It is important to note that meta-complexity does not figure anywhere in the statement of this theorem. Yet it plays a crucial role in the proof, which is based on the hardness of a gap version of computing the time-bounded Kolmogorov complexity of a string given an NP oracle. A key tool that is used to analyze Kolmogorov complexity is the direct product generator, which is a standard pseudorandom generator construction based on the encoding of a hard function using a list-decodable code. In general, meta-complexity has rich connections to pseudorandomness, and this is another powerful illustration of that.

Meta-complexity has also yielded some impressive recent results on the challenge of ruling out Pessiland (which, as the name suggests, is the worst of possible worlds, where we have average-case hard problems and yet there is no cryptography). Liu and Pass (FOCS 2020) proved the striking characterization that one-way functions (OWFs) exist if and only if computing the time-bounded Kolmogorov complexity is mildly hard-on-average. Thus, meta-complexity is in a precise sense necessary to establish the existence of OWFs. In a recent CCC 2022 paper, Liu and Pass show a similar connection between OWFs and the mild average-case hardness of the problem of computing the conditional time-bounded Kolmogorov complexity, but now they further show this conditional version to be NP-hard. This means that the holy grail of basing OWFs on the worst-case hardness of NP would follow if we could show a worst-case to average-case reduction for this meta-complexity problem! Even if this latter task proves elusive, the fact that OWFs are so intrinsically connected to meta-complexity is fascinating.

What about MCSP itself?

Returning to the complexity of MCSP itself, a beautiful recent result by Hirahara (FOCS 2022) shows the NP-hardness under randomized reductions of the partial-function variant (called MCSP*) of MCSP, a question that has been open since Levin’s work 50 years ago (recall that Levin showed NP-hardness of the DNF version of MCSP*). The new MCSP* result and the barriers it overcomes have the community very excited. MCSP* was also shown to be hard assuming the exponential time hypothesis in earlier work of Ilango. The approach of first establishing hardness for the partial variant and then further reducing it to the total-function variant successfully yielded hardness results for DNF-MCSP and Formula-MCSP. Whether one can similarly further reduce MCSP* to MCSP, however, remains unclear.

The NP-hardness result for MCSP* and the underlying proof approach are extremely interesting on their own. An intermediate result, of significant interest already, is a hardness result for PAC learning via efficient programs. Specifically, given a distribution of example-label pairs, the goal is to determine whether there is a program of size s running in polynomial time that fits the data (i.e., it agrees with all example-label pairs). This problem is called MINLT, and it was known (Ko, 1991) that no relativizing proof of the hardness MINLT is possible. Hirahara shows NP-hardness of MINLT under randomized reductions, overcoming this barrier. The reduction is from the minimum monotone satisfying assignment (MMSA) problem, where given a monotone formula F, the goal is to find a satisfying assignment to F of minimum Hamming weight. This problem generalizes set cover (which is the CNF version of the problem), and strong PCP-based inapproximability results are known for it.

The goal of the reduction is to sample examples (x,b) so that a low-weight satisfying assignment to F yields a short (and efficient) program that correctly computes b on every sample x, whereas if every satisfying assignment of F has high weight, then no small program can do so (in fact, the reduction will ensure the program can’t predict the label b better than essentially random guessing). The reduction ingeniously uses secret sharing (a basic notion in information-theoretic cryptography), and the analysis hinges on insights from Kolmogorov complexity and algorithmic information theory.

An n-party secret-sharing scheme for a bit b distributes shares among n parties such that certain “qualified” subsets of parties are able to reconstruct b by combining their shares, whereas the remaining subsets have no idea about b based on their shares. One commonly used choice of qualified subsets is all subsets of t or more parties. But secret-sharing schemes exist corresponding to any monotone formula F on n bits, with qualified subsets corresponding to precisely the satisfying assignments of F.

The reduction from a formula F (that’s an instance of MMSA) to the PAC learning problem proceeds as follows. First, it samples some random strings r1,r2,…,rn (recall that the reduction is randomized) whose purpose will be to mask the shares of the secret-sharing scheme. The distribution on (x,b) that the reduction produces is as follows.

- It samples a random bit b and computes the shares s1,s2,…,sn to hide b per some secret-sharing scheme corresponding to the monotone formula F.

- The input x masks the share si using the output of a pseudorandom generator on input ri for every i. (This is done by sampling some seeds zi, and including zi, si + G(ri,zi) for some pseudorandom generator G.)

If setting a subset T of bits to 1 makes the formula F true, then the corresponding shares suffice to reconstruct b, and a program that has rj for all j in T hard-coded will then be able to compute b. This yields the completeness of the reduction. The soundness is subtle, and is based on showing that the only way a program P can compute b is to “know” the random strings rj corresponding to some qualified subset, and must thus be large if all qualified subsets are large (which in turn must be the case if all satisfying assignments to F have large Hamming weight). The soundness analysis is accomplished using an algorithmic information extraction lemma, after suitably formalizing what it means for a program P to “know” some string.

Returning to MCSP*, one can convert an instance of MINLT to a partial function f that’s an instance of MCSP* by stipulating f(x) = b for any pair (x,b) in the support of the MINLT distribution, and leaving f unspecified on the remaining inputs. However, we need to reduce the number of input bits to f from poly(n) to O(log n), so that the truth table produced will be of polynomial size. This is achieved using several other ideas, including PCPs and the Nisan-Wigderson pseudorandom generator, and an efficient circuit to compute the direct product of any function.

The myriad connections of meta-complexity to learning and pseudorandomness must be apparent from the above discussion. Meta-complexity enjoys many deep connections to proof complexity and meta-mathematics more broadly, namely the difficulty of proving mathematical theorems such as circuit lower bounds in various logical systems. The upcoming workshop in March, Proof Complexity and Meta-Mathematics, focuses precisely on this theme.

Arithmetic progressions in dense integer sets

Turning to theory beyond meta-complexity and the Simons Institute, the 150-plus strong list of accepted papers to STOC 2023 was announced this month. It features many amazing results spanning the full breadth of theoretical computer science, and TheoryFest should be a more exciting (and certainly more affordable) local attraction than Disney World this June. I might discuss some of the results that caught my eye in a later column, as the present column has already gotten quite long.

Nevertheless, I can’t resist mentioning one spectacular result that was announced this month, making incredible progress on one of the classic questions in extremal and additive combinatorics, namely the size of AP-free sets of integers. What is the asymptotically largest subset A of {1,2,…,N} that can one pick while avoiding a nontrivial 3-term arithmetic progression (3-AP)? That is, if there are a,b,c in A with a+c=2b, then we must have a=b=c, and we would like to make A as large as possible subject to this requirement. This and similar questions are of interest in the realm of “structure vs. randomness” in pseudorandomness, so they bear some natural appeal to complexity theorists.

A construction by Behrend from 1946 gives a 3-AP-free set of density (i.e., size normalized by n) about exp(-(log N)1/2), and no set of larger density is known. In terms of upper bounds, a long line of work showed that the density of a 3-AP-free is at most (log N)-1-c for some positive (but tiny) constant c. Kelley and Meka in one spectacular swoop improved the upper bound all the way to exp(-(log N)1/11), right in the ballpark of the Behrend construction!

The Behrend construction is an appealing one: one takes a set B of points in {1,2,…,M}m, all of which have Euclidean length r (so they lie on a sphere) — a pigeonhole argument guarantees a large B for some radius r. Since a line can intersect a sphere in at most two points, no three distinct points in B are collinear. One then maps B into a subset A of {1,2,…,N} for N = (2M)m by mapping a point b in B to the integer with base-(2M) representation equal to the coordinates of b. This ensures that a 3-AP in A must lead to a 3-term AP in B, and in particular three collinear points in B, which is impossible. Optimizing the choice of parameters M,m leads to a set A of density about exp(-(log N)1/2).

The Kelley-Meka proof is based on Fourier analysis, and is first executed in the model of the vector space Fqn for a prime field Fq (where 3-APs are called capsets), and then adapted with additional innovations to the integers case. This two-pronged approach is a common methodology in additive combinatorics, allowing the development of tools in the more tractable vector-space world first, and then extending them to integers via surrogates of subspaces like Bohr sets (as highlighted in this exposition of the Kelley-Meka proof in this mold). A few years ago, an ingenious use of the polynomial method led to stunning progress on the capset problem, leading to a short proof of an upper bound of O(2.756n) for q = 3, a vast improvement to the previous record upper bound of 3n/n1+c for some c > 0.

The polynomial method seemed specific to the finite field setting and unfortunately did not lead to any improvements to the integers setting. We can therefore for now mark this as a win for analytic methods. The simplicity of the polynomial/slice-rank method for capsets was inspiring, so I hope polynomials still have a trick up their sleeve that can be unearthed to show Behrend-type upper bounds on AP-free subsets of integers. After all, the Behrend construction is very algebro-geometric.