Research Vignette: On Sex, Math, and the Origin of Life

By Adi Livnat

One of the most fascinating kinds of mathematical work is to create a new mathematical framework where none has existed before. Two cases in point are Turing’s 1936 paper and von Neumann and Morgenstern’s Theory of Games and Economic Behavior. In the Simons program on Evolutionary Biology and the Theory of Computing, an opportunity arose to create a new mathematical framework for evolution based on new ideas and empirical knowledge, with implications for wide-ranging topics, from sex to genetic disease to the origin of life.

Traditional evolutionary theory is based on the idea that mutation happens by accident in a given gene due to a copying error, radiation, or another unintended disruption. This mutation may be beneficial, deleterious, or neutral in terms of the effect that it has in and of itself on the fitness of its carrier. If it is beneficial, it is favored by selection and thus may spread from the individual in which it occurred to the population, bringing along with it the benefit or improvement in structure or behavior that it provides. If it is deleterious it likely goes extinct, and if it is neutral its frequency performs a random walk, called “random genetic drift,” ending with either extinction or fixation.
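
The random walk of a neutral mutation can be illustrated with a small simulation. What follows is only a minimal sketch under assumptions that are not in the text above: a Wright-Fisher style population of fixed size, non-overlapping generations, and hypothetical function names (`neutral_drift`, `fixation_fraction`) chosen for illustration.

```python
import random


def neutral_drift(pop_size, start_count, rng):
    """Follow a neutral allele until it is lost (0) or fixed (pop_size).

    Assumption: Wright-Fisher sampling. Each of the pop_size gene copies in
    the next generation carries the allele with probability equal to its
    current frequency, so the allele count performs a random walk.
    """
    count = start_count
    generations = 0
    while 0 < count < pop_size:
        freq = count / pop_size
        count = sum(1 for _ in range(pop_size) if rng.random() < freq)
        generations += 1
    return count, generations


def fixation_fraction(pop_size, start_count, runs, seed=0):
    """Fraction of independent runs that end in fixation rather than loss."""
    rng = random.Random(seed)
    fixed = sum(
        1
        for _ in range(runs)
        if neutral_drift(pop_size, start_count, rng)[0] == pop_size
    )
    return fixed / runs


if __name__ == "__main__":
    # Under neutrality, the fixation fraction should be close to the
    # starting frequency (here 5 / 20).
    print(fixation_fraction(pop_size=20, start_count=5, runs=2000))
```

Every run ends in one of the two absorbing states, extinction or fixation, which is the behavior the paragraph above describes for a neutral mutation.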

This accidental mutation mindset has left fundamental problems open from the start. First, can the accumulation of mutations, each supposedly serviceable on its own, even lead to the evolution of complex adaptation, where complementarity is key?

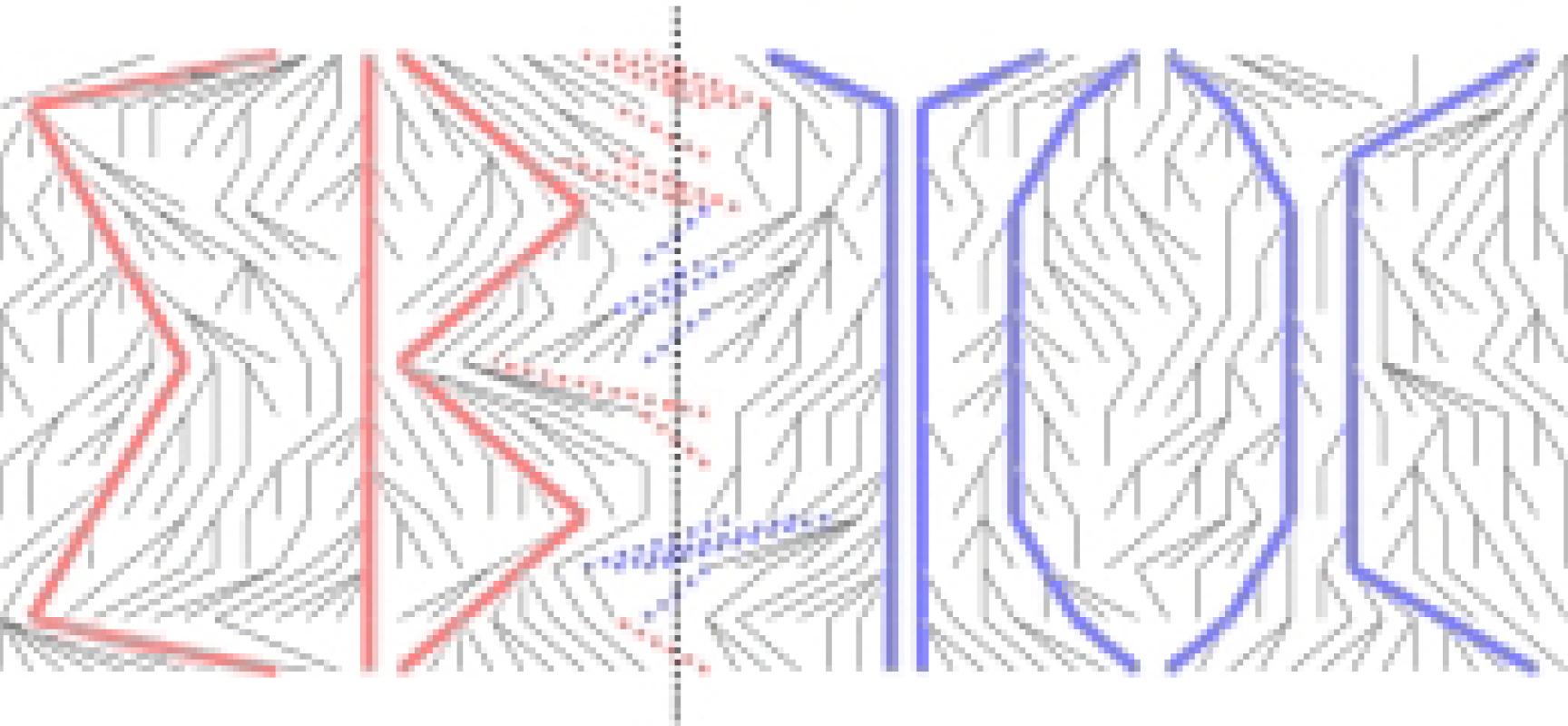

Second, there is nothing in the ideas above that gives us a straightforward reason to believe that the sexual shuffling of the genes is of extreme importance for evolution; yet empirically, it is. So why? A common, layman's answer is that sex creates a vast number of different combinations of genes that serve as fuel for selection—these are the individual genotypes. But these combinations are not heritable; just as sex puts them together, it also breaks them down.

Third, since the time when the ideas above were formed in the early 20th century, we have experienced a revolution in our empirical knowledge. We now know that mutation is affected by complex mechanisms that are guided to their places of action by homology and by DNA sequence and structure, and that contextual parameters influence the nature of the genetic change to occur. So why have we been believing that mutation is accidental?

The fourth problem is so troubling that few can contemplate it calmly—it is the problem of chance. Consider transposable elements, for example—genetic elements that move around and are copied and pasted over the genome over evolutionary time. Traditional thinking interprets them as little more than genomic parasites that are occasionally coopted for host use by chance. But could chance movements of genomic parasites actually explain the crucial part of the tying together of complex genetic networks of hundreds of genes underlying novel, complex adaptations that we now know to be done by transposable elements? Are we not invoking chance too often in our “explanations” of things?

The reason we have been believing that mutation is accidental has not been empirical. It has been theoretical. So far, there have been only two basic ideas: neo-Darwinism—the idea that accidental mutation and natural selection explain evolution as described; and Lamarckism—the idea that mutation could somehow respond directly to the immediate environment in a smart way. The rejection of the latter as a general theory made it seem as though mutation must be essentially accidental (with the addition that mechanisms of “evolvability” modulating it may have themselves evolved by accidental mutation and natural selection). But what if there was a third alternative? What if mutation can be essentially non-accidental yet not be of the Lamarckian kind?

We all know that accidental mutation can happen as a result of radiation and other disruptions, and that such mutation can lead to disease. But assume that the mutations that are relevant for the process of adaptive evolution under selection are biological—that they are outcomes of biological processes. This assumption unleashes a whole new way of looking at evolution.

With this assumption, mutations are outcomes of genetic interactions that occur in a heritable mode, namely in the germ cells. Much as genes interact in giving rise to a classical trait, they also interact in influencing genetic change.

Put in other words, mutation is an outcome of a process that combines information from alleles at multiple loci and writes the result of the combination operation into the locus of the mutation. Because the result is written into a single locus, which is not broken down by recombination, information from combinations of genes flows to future generations even though the combinations themselves are transient. This addresses the aforementioned problems from a unifying perspective: Sex continually generates complex combinations of alleles across loci, selection acts on these combinations as complex wholes, and these combinations have heritable effects through the mutations that are derived from them.

Thus, non-accidental mutation has been the missing piece that allows sex to play a central role as the generator of allele combinations, in accordance with the layman's intuition. With it, we can begin to talk about selection as acting on individuals as complex wholes: on genetic interactions, and not only on single alleles as atomistic units.

In addition, the question of how much chance is invoked in our explanations of adaptive evolution when we rely on accident as the source of mutation, which has not been amenable to the scientific method, is replaced with a question that is amenable to it: What is the detailed nature of the biological processes of mutation?

The connection to theoretical computer science is now clear: When mutation is an outcome of genetic interactions, we can think of the alleles at the multiple loci that affect mutation as inputs into the mutational process, and think of the mutation itself as the output of this process. Furthermore, since the output of a mutational event at one generation can serve as an input into mutational events at other generations, non-accidental mutation creates a network of information flow and computation across the genome and through the generations, from many genes into any one gene and from any one gene into many genes. This view of mutation as a computational event involving fan-in and fan-out, as opposed to a local accident, greatly extends the relevance of the computational lens for our understanding of nature.
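
The input/output picture above can be made concrete with a toy data structure. This is a sketch under loud assumptions: the genome is reduced to a tuple of integer allele labels, and the `MutationRule` class, the `recombine` helper, and the arithmetic `combine` function are all hypothetical illustrations, not a model of any real mutational mechanism.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

Genome = Tuple[int, ...]  # one integer allele label per locus (toy encoding)


@dataclass(frozen=True)
class MutationRule:
    """Hypothetical rule: read alleles at several loci, write one locus."""
    input_loci: Tuple[int, ...]   # loci whose alleles are read (fan-in)
    target_locus: int             # locus where the result is written
    combine: Callable[..., int]   # assumed combination operation

    def apply(self, genome: Genome) -> Genome:
        inputs = [genome[i] for i in self.input_loci]
        mutated = list(genome)
        mutated[self.target_locus] = self.combine(*inputs)
        return tuple(mutated)


def recombine(mother: Genome, father: Genome, mask: Tuple[bool, ...]) -> Genome:
    """Take each locus from the mother where mask is True, else the father."""
    return tuple(m if take else f for m, f, take in zip(mother, father, mask))


def encode(a: int, b: int) -> int:
    # Arbitrary illustrative operation that keeps both inputs recoverable.
    return 10 * a + b


rule_a = MutationRule(input_loci=(0, 1), target_locus=2, combine=encode)
rule_b = MutationRule(input_loci=(2, 3), target_locus=4, combine=encode)

parent = (1, 2, 0, 3, 0)
mutated = rule_a.apply(parent)            # (1, 2, 12, 3, 0)

# Recombination breaks up the (1, 2) combination at loci 0 and 1,
# but the allele written at locus 2 is inherited intact.
partner = (7, 8, 9, 9, 9)
child = recombine(mutated, partner, (True, False, True, False, False))
# child == (1, 8, 12, 9, 9)

# The output of the earlier mutation now serves as an input to a later one.
grandchild = rule_b.apply(child)          # (1, 8, 12, 9, 129)
```

In this toy, locus 2 receives information from two loci (fan-in) and later feeds a mutation at locus 4 (fan-out), while the original combination of alleles at loci 0 and 1 no longer exists in the child.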

Not only does this new conceptual structure explain diverse empirical facts not explained before, it also touches on deep questions like the origin of sex and the origin of life. Perhaps the most commonly known origin-of-life scenario, popularized by Dawkins in The Selfish Gene, holds that one day a self-replicating molecule appeared by chance. This molecule created a population of like molecules. Assuming accidental copying errors, this accidental molecule presumably led, through neo-Darwinian evolution, to all of life.

However, things could have been different. If evolution started with a single, chance-arising self-replicating molecule, then it started asexually (because the self-replicating molecule had no partners) and with accidental mutation (because there is no room for a higher-level phenotype that could affect mutations). This is incongruent with the hypothesis I have proposed above that sex and non-accidental mutation are essential. But what is sex, most basically? It is the mixing of hereditary material. The free mixing of compounds that the image of the primordial soup entails fits a “sexual” beginning just as well as an “asexual” one, when the meanings of words are abstracted appropriately. That original, natural state of mixing could have gradually become formalized into the many sophisticated and advanced sexual mechanisms seen today. A complex world of chemical reactions could have gradually become more and more biological, and mutation could have always been an outcome of complex reactions, starting with chemical reactions that have gradually become more biological and that underlie what we call today mutations in genes. From this view, life had no particular point of “origin” in space and time; it is rather a gradual transition. The “origin” was mechanistic, and the physical world had the seeds of life.

The ideas above are also relevant for our understanding of genetic disease. Note that in recent years we have learned that both recurrent de novo mutations and genetic interactions are more important genetic factors of disease than we previously realized. In a general sense, both fit better with a theory of evolution that puts non-accidental mutation and genetic interactions at center stage than with traditional theory. In particular, non-accidental mutation accounts for long-term mutational tendencies, and these in turn suggest evolutionary “friction points” between the mutational pressure to change in certain respects, itself formed by long-term evolution, and the need to perform under the current organismal constraints of structure and function. At these points, genetic disease repeatedly arises. Thus, long-term exposure to the malaria parasite may have caused mutational mechanisms to gradually home in on the hemoglobin genes, where recurrent mutations now both ameliorate malaria and cause blood disease. Similarly, autism, which has a strong genetic component, may be related to long-term selection, including sexual selection, for the evolution of the human brain.

The view outlined above, which emphasizes selection on transient combinations of alleles, has created a line of research that has captured the interest of multiple members of the Simons program, yielding talks and works in progress whose common theme is selection on interactions. These include works on mixability and the multiplicative weight updates algorithm by Chastain, Livnat, Papadimitriou and U. Vazirani; on the substitution cost by Pippenger; on genetic diversity by Papadimitriou, Daskalakis, Wu and Livnat; and on connections between satisfiability and evolution by Wan, Rubinstein, Papadimitriou, G. Valiant and Livnat. Also connected to our topic is the creative work by program participants L. Valiant, Feldman, P. Valiant, Kanade and Angelino on the Probably Approximately Correct (PAC) framework in evolution, which allows for non-accidental mutation. While these works have begun to open up new frontiers, the most exciting parts of mathematizing this new view of evolution, based on mutation as information flow across loci and selection acting on genetic interactions, remain to be done.
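
To give a flavor of how selection on allele combinations relates to multiplicative weight updates, here is a minimal sketch. It is not a reproduction of the cited work. It assumes a haploid population with two loci, genotype frequencies equal to the product of allele frequencies (linkage equilibrium), and a hypothetical function name `allele_update`.

```python
def allele_update(x, y, fitness):
    """One generation of selection on allele frequencies at two loci.

    x[i] is the frequency of allele i at the first locus, y[j] the frequency
    of allele j at the second, and fitness[i][j] the fitness of genotype
    (i, j). Assumption: the genotype (i, j) has frequency x[i] * y[j].
    """
    n, m = len(x), len(y)
    # Mean fitness of each allele, averaged over partners at the other locus.
    marg_x = [sum(y[j] * fitness[i][j] for j in range(m)) for i in range(n)]
    marg_y = [sum(x[i] * fitness[i][j] for i in range(n)) for j in range(m)]
    mean_w = sum(x[i] * marg_x[i] for i in range(n))
    # Each frequency is multiplied by its allele's mean fitness, then
    # renormalized: the same shape as a multiplicative weights step.
    new_x = [x[i] * marg_x[i] / mean_w for i in range(n)]
    new_y = [y[j] * marg_y[j] / mean_w for j in range(m)]
    return new_x, new_y


if __name__ == "__main__":
    # Illustrative fitness values only: one combination is slightly favored.
    x, y = [0.5, 0.5], [0.5, 0.5]
    fitness = [[1.02, 1.00], [1.00, 1.00]]
    for _ in range(3):
        x, y = allele_update(x, y, fitness)
    print(x, y)
```

Writing each fitness as 1 + s·Δ for a small selection strength s makes the factor applied to each allele 1 + s times its average Δ, so s plays the role of the learning rate in a multiplicative weights step and each allele is rewarded according to how well it performs across combinations.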

For further reading:

On a new conceptual framework for evolution:

On the connection between theoretical computer science and evolutionary biology:

- Chastain, E., Livnat, A., Papadimitriou, C., and Vazirani, U. 2014. Algorithms, games and evolution. Proceedings of the National Academy of Sciences, USA, 111:10620–10623.

- Valiant, L. 2013. Probably Approximately Correct: Nature’s Algorithms for Learning and Prospering in a Complex World. Basic Books.

- Livnat, A. and Pippenger, N. 2006. An optimal brain can be composed of conflicting agents. Proceedings of the National Academy of Sciences, USA, 103:3198–3202.
