Organizers’ Report: Algorithm Design, Law, and Policy Virtual Kick-Off

by Niva Elkin-Koren (University of Haifa), Michal Feldman (Tel Aviv University), Shafi Goldwasser (Simons Institute), and Inbal Talgam-Cohen (Technion - Israel Institute of Technology)

The appearance of Mark Zuckerberg before Congress in 2018 was a watershed moment in many ways; in particular, it demonstrated the extent to which algorithms now govern every aspect of our lives, as individuals and as a society. The gap between governance by legal, ethical, or social norms and governance by algorithmic systems prompted the idea of holding an “AlgoLawPolicy” (Algorithm Design, Law, and Policy) workshop.

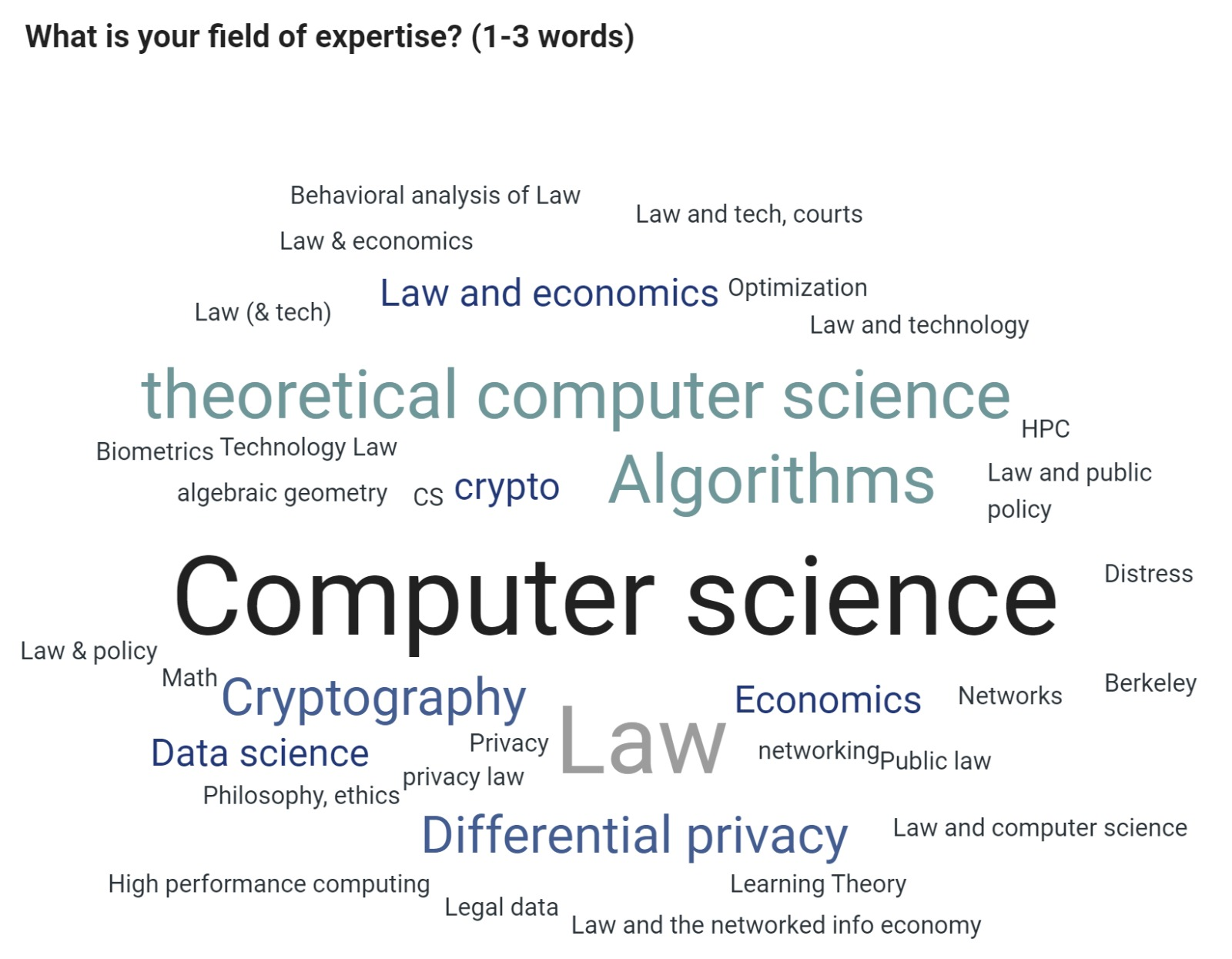

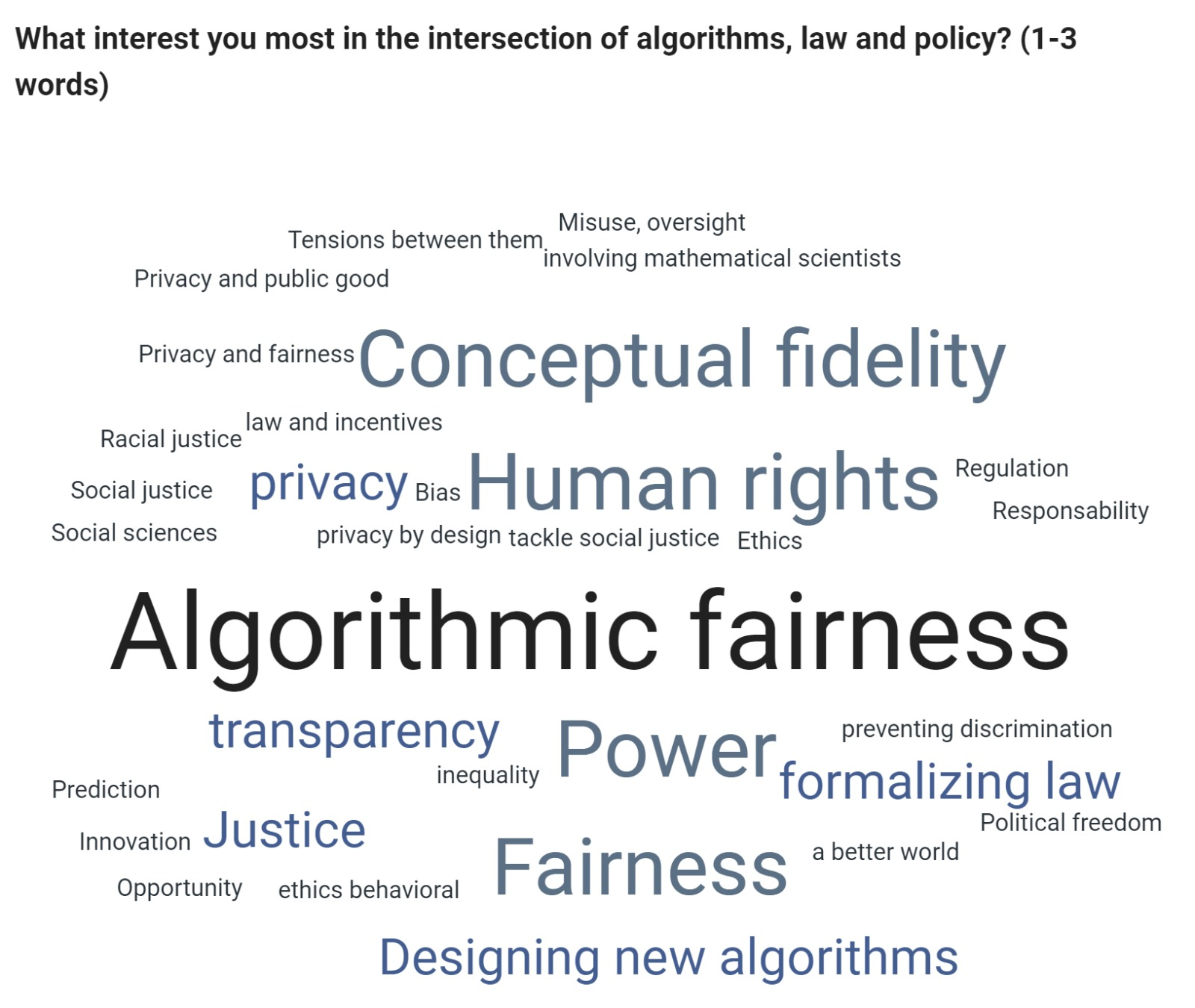

Our goal was to bring together researchers and scholars from diverse disciplines, including computer science, law, ethics, social science, and data science, to identify concrete research problems of joint interest and develop a shared language to study them as a community (see Figure 1). We saw great potential in merging decades of legal and philosophical thought with the tools and mathematical rigor of computational theory and practice. Here are a few of our impressions and insights from organizing this event.

Figure 1. Participants’ (self-reported) fields of expertise and what interests them most at the intersection of algorithms, law, and policy. (Word clouds created via Slido polling platform.)

The workshop was originally planned to take place in person at the Simons Institute. Due to COVID-19 restrictions, the physical workshop was postponed (currently planned for April 2021), and we decided to hold a two-day virtual “appetizer” event instead. The pandemic and the global lockdown have accelerated the transition to digital tools, making our goal of strengthening and deepening the conversation among computer scientists, data scientists, social scientists, and legal scholars all the more urgent.

Content and schedule

In putting together the workshop’s schedule of talks and activities, we sought to facilitate in-depth cross-disciplinary conversation by tackling some practical challenges. The first day of the virtual event was dedicated to COVID-19 tracing apps as deployed by many countries around the world, reflecting different design choices that carry potential health, ethical, economic, and political implications. Keynote speaker Ron Rivest gave a talk on the state of the art in contact tracing, followed by commentator Helen Nissenbaum, who critically analyzed these design choices, applying an analytical framework of “Values at Play.” Four breakout rooms, each led by a computer scientist and a legal scholar, offered an opportunity for more in-depth discussions of specific policy challenges raised by COVID-19 tracing apps: Privacy Challenges, Public-Private Interface, Voluntary vs. Mandatory Dilemmas, and Accountability & Transparency. In the concluding keynote, Google’s chief economist, Hal Varian, spoke about short-term prediction (“nowcasting”) of COVID-19, with comments by Michal Gal on access barriers to data.

Prompted by the global George Floyd protests, the second day was dedicated to exploring ethical aspects of computing, AI, and social justice. Keynote speaker Rediet Abebe gave a thought-provoking talk about what roles, if any, computing can play to support and advance fundamental social change. Commentator Martha Minow argued that since the “master’s tools” will “never dismantle the master’s house,” new computational tools could be useful in advancing social justice. Next, a simulation exercise sought to demonstrate the ethical implications of specific design choices in content moderation AI intended for social media platforms. Jennifer Chayes chaired the concluding panel, which was awe-inspiring in its ambition and breadth. She outlined her plans for Berkeley’s newly founded Division of Computing, Data Science, and Society in conversation with her “partners in crime” from CS, social welfare, sociology, medicine, and health policy, who shared their personal experiences in shaping policy by analyzing data algorithmically.

Formalizing vs. balancing

So what did we learn? One of the most salient takeaways was apparent from the very first session and resonated throughout the workshop. Rivest viewed privacy as one of the least problematic aspects of the proposed contact tracing system, since it was built into the system (no geolocation, no data leave the phone without user consent). Commentator Nissenbaum, by contrast, argued that privacy should not be absolute, but rather balanced against other competing values like public health or the need to build scientific knowledge of the pandemic. In the follow-up conversation, Kobbi Nissim asked whether the values Nissenbaum saw as competing were indeed so, suggesting that computational tools may enable having both privacy (formulated appropriately) and value extraction from data. Minow proposed to protect some aspects of privacy (like not being exposed to discriminatory treatment) through legal norms rather than through technology. Later in the event, Nissenbaum took her argument further, suggesting that the act of balancing values can offer a way out of CS impossibility results, such as contradictions between different formalizations of fairness.

Stepping/being pushed out of your comfort zone

The discussion described above highlighted one of the biggest gaps between the disciplines. Computer scientists seek a model with an objective and constraints (“0.1 level of privacy”) to guide the algorithmic design task; they tend to view complementary tools, like rules, as exogenous to the task. Legal and social scholars seek a wide view, factoring in multiple objectives and taking into account many tools from the legal and social toolbox. We noticed that when facing this gap, both groups seemed out of their comfort zones. Computer scientists may feel that widening the scope too much could interfere with innovation; they also view some of the wider considerations as ideological and/or political and may react with distrust. Legal scholars may feel trapped by the need to make binding design decisions up front when designing a system, rather than using an iterative, pluralist, case-by-case approach, and they view computing as just one (imperfect) tool that is not always the most adequate one. This insight further stresses the need to integrate the two perspectives into a comprehensive approach for tackling current challenges.

Collaboration and education

Everyday life now includes contact tracing apps, ML predictions, decision-making by dynamic AI systems, and more. This reality will increasingly push the relevant disciplines out of their comfort zones, expanding disciplinary boundaries whether we like it or not. So, what is required for the relevant disciplines to get ready for these new challenges and opportunities? The answer is probably a combination of education and research collaborations. We held the workshop’s two breakout sessions in smaller working groups in between the talks to create brainstorming opportunities and demonstrate what a joint hands-on academic activity might look like. In particular, the simulation (based on a Technion pilot led by Niva Elkin-Koren, Avi Gal, and Shlomi Hod) sought to demonstrate how having mixed teams tackle a practical challenge could serve as a pedagogical tool to expose some gaps in translation between the disciplines and to help bridge them. In the concluding panel, Abebe warned against “drive-by collaborations,” and other panel members described how they found it necessary to completely immerse themselves in another discipline in order to produce meaningful interdisciplinary research.

What participants thought

We’ve reported a few of the things we’ve learned from organizing the workshop, but more importantly, what did the participants feel they took away? We conclude with a small sample. Kira Goldner described going into the workshop expecting it to be focused on formalizing law (notable recent progress on this front includes notions like “singling out” [1] and the “right to be forgotten” [2]), but finding it to be much more focused on the power, impact, and implications of computing in society, and unexpected combinations of these. A common theme she saw in the talks was the various factors to consider before using computing as a solution. “My biggest takeaways were, while computing can help to digital contact-trace at scale or to nowcast COVID-19, we need to factor in privacy, and incentives, and the domain, and many other factors in order to be sure we’re using the right aspects of computing (or whether computing is the right tool at all).”

And Rann Smorodinsky suggested adding even more voices to the discussion: “I believe economists have gone down the path of figuring out how formal modeling is beneficial and harmful for social causes. It would be wise to have them in the loop.”

References

1. Aloni Cohen, Kobbi Nissim. “Towards Formalizing the GDPR’s Notion of Singling Out.” 2019. Available at https://arxiv.org/abs/1904.06009.

2. Sanjam Garg, Shafi Goldwasser, Prashant Nalini Vasudevan. “Formalizing Data Deletion in the Context of the Right to Be Forgotten.” EUROCRYPT (2) 2020: 373-402.